SAP on Amazon Web Services (AWS)

A collection of SAP-related resources for AWS. This is a work in progress; please revisit this page from time to time.


AWS (Amazon Web Services) SAP Benchmarks in Cloud Environments

Resources

- SAP Benchmarks in Cloud Environments

- SAP Definition of Cloud Awareness

Benchmarks published

Two-tier Internet Configuration

| Certification Number | Date | Benchmark | Instances | OS |
|---|---|---|---|---|
| 2016021 | May 2016 | Sales and Distribution | x1.32xlarge instance | Windows Server 2012 R2 Standard Edition |
| 2015032 | July 2015 | Sales and Distribution | m4.10xlarge instance | Windows Server 2012 Standard Edition |
| 2015006 | Mar. 2015 | Sales and Distribution | c4.8xlarge instance | Windows Server 2012 Standard Edition |
| 2015005 | Mar. 2015 | Sales and Distribution | c4.4xlarge instance | Windows Server 2012 Standard Edition |
| 2014041 | Oct. 2014 | Sales and Distribution | c3.8xlarge instance | Windows Server 2012 Standard Edition |
| 2014035 | June 2014 | Sales and Distribution | r3.8xlarge instance | Windows Server 2012 Standard Edition |
| 2014010 | Mar. 2014 | Sales and Distribution | cr1.8xlarge instance | Windows Server 2008 R2 Datacenter |

Three-tier Internet Configuration

| Certification Number | Date | Benchmark | Instances | OS |
|---|---|---|---|---|
| 2013035 | Nov. 2013 | Sales and Distribution | 9 m2.4xlarge instances | Windows Server 2008 R2 Datacenter |

SAP BW Enhanced Mixed Load (BW EML)

| Certification Number | Date | Benchmark | Ad-Hoc Navigation Steps/Hour | Instances | OS |
|---|---|---|---|---|---|
| 2014001 | Jan. 2014 | SAP BW Enhanced Mixed Load (BW EML) 500.000 records | 113390 | 1 cr1.8xlarge DB server + 2 c3.8xlarge appl. server instances | SuSE Linux Enterprise Server 11 |
| 2014013 | Apr. 2014 | SAP BW Enhanced Mixed Load (BW EML) 5.000.000 records | 137510 | 1 cr1.8xlarge DB server + 2 c3.8xlarge appl. server instances | SuSE Linux Enterprise Server 11 (DB server), Windows Server 2008 R2 Datacenter Edition (app servers) |
| 2014014 | Apr. 2014 | SAP BW Enhanced Mixed Load (BW EML) 2.000.000.000 records | 177590 | 5 cr1.8xlarge DB server + 3 c3.8xlarge appl. server instances | SuSE Linux Enterprise Server 11 (DB server), Windows Server 2008 R2 Datacenter Edition (app servers) |


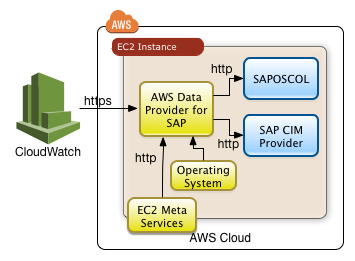

AWS Data Provider for SAP

Resources

Users in the AWS China regions will have to use:

Testing the Collector

A correctly working collector runs a web server which reports the current status at a URL of the following form:

http://localhost:8888/vhostmd

For security reasons, the collector binds to localhost only.
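The check can also be scripted. Below is a minimal sketch, assuming curl and ss (from iproute2) are installed on the instance; the function names are made up for this example. One function tests whether the collector URL answers, the other reads `ss -ltn` output and confirms that port 8888 is bound to a loopback address only.

```shell
#!/usr/bin/env bash
# Sketch: check the AWS Data Provider collector (function names are hypothetical).
# Assumes curl and ss (from iproute2) are available on the instance.

# Returns 0 if the collector URL answers with an HTTP success status.
collector_is_up() {
    curl -fsS --max-time 5 -o /dev/null "${1:-http://localhost:8888/vhostmd}"
}

# Reads `ss -ltn` output on stdin. Returns 0 only if the given port
# (default 8888) has at least one listener and all listeners are loopback.
bound_to_loopback_only() {
    awk -v port="${1:-8888}" '
        NR > 1 {
            n = split($4, a, ":")
            if (a[n] == port) {
                found = 1
                addr = substr($4, 1, length($4) - length(port) - 1)
                if (addr != "127.0.0.1" && addr != "[::1]" && addr != "::1") bad = 1
            }
        }
        END { if (found && !bad) exit 0; exit 1 }'
}
```

On the instance, `collector_is_up && ss -ltn | bound_to_loopback_only 8888` returns 0 only if both conditions hold.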


Data Provider Installation through AWS Systems Manager (for SLES)

Prerequisites

Execute the following two steps to enable an instance to be managed by Systems Manager:

- Add the AWS managed policy AmazonSSMAutomationRole to the role of the instance

- Install the Systems Manager agent (SSM Agent) according to the AWS documentation

Creation of the Systems Manager Document

Use the AWS console.

Navigate to "Systems Manager" -> "Documents" and create a new document with the following content:

```json
{
  "schemaVersion": "2.2",
  "description": "Command Document Example JSON Template",
  "mainSteps": [ {
    "action": "aws:runShellScript",
    "name": "test",
    "inputs": {
      "runCommand": [ "wget https://s3.amazonaws.com/aws-data-provider/bin/aws-agent_install.sh;",
        "chmod ugo+x aws-agent_install.sh;",
        "sudo ./aws-agent_install.sh;",
        "curl http://localhost:8888/vhostmd"
      ],
      "workingDirectory": "/tmp",
      "timeoutSeconds": "3600",
      "executionTimeout": "3600"
    }
  } ]
}
```

Save the document with the name SAP-Data-Provider-Installation-Linux.
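The document can also be registered from the command line. A minimal sketch, assuming the JSON above was saved locally as sap-data-provider.json (a hypothetical file name); it calls `aws ssm create-document` with the document name used on this page.

```shell
#!/usr/bin/env bash
# Sketch: register the command document with the AWS CLI instead of the console.
# The default file name sap-data-provider.json is hypothetical.
create_dp_document() {
    local file="${1:-sap-data-provider.json}"
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
    aws ssm create-document \
        --name "SAP-Data-Provider-Installation-Linux" \
        --document-type "Command" \
        --document-format "JSON" \
        --content "file://${file}"
}
```

Running `create_dp_document sap-data-provider.json` once per region is enough; afterwards the document can be used from the console or with send-command.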

Command Line Execution of the Systems Manager Document

New data providers can then be provisioned with the AWS console or the following AWS CLI command:

```shell
aws ssm send-command --document-name "SAP-Data-Provider-Installation-Linux" \
    --comment "SAP Data Provider Installation" \
    --targets "Key=InstanceIds,Values=i-my-instance-id" \
    --timeout-seconds 600 --max-concurrency "50" \
    --max-errors "0" --region my-region
```

Replace the placeholders

- i-my-instance-id with the instance id

- my-region with the matching region
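To install the data provider on several instances at once, `--targets` accepts a comma-separated list of instance ids. The following sketch (function name hypothetical) builds that list and rejects malformed ids before calling the AWS CLI; the id pattern of `i-` plus 8 or 17 hexadecimal digits is an assumption based on the EC2 id formats.

```shell
#!/usr/bin/env bash
# Sketch: send the installation document to several instances.
# Usage: send_dp_install region instance-id [instance-id ...]
send_dp_install() {
    local region="$1"; shift
    local re='^i-([0-9a-f]{8}|[0-9a-f]{17})$' id ids=""
    for id in "$@"; do
        if [[ ! "$id" =~ $re ]]; then
            echo "invalid instance id: $id" >&2
            return 1
        fi
        ids="${ids:+${ids},}${id}"   # append with a comma separator
    done
    [ -n "$ids" ] || { echo "usage: send_dp_install region instance-id..." >&2; return 1; }
    aws ssm send-command \
        --document-name "SAP-Data-Provider-Installation-Linux" \
        --comment "SAP Data Provider Installation" \
        --targets "Key=InstanceIds,Values=${ids}" \
        --timeout-seconds 600 --max-concurrency "50" --max-errors "0" \
        --region "$region"
}
```

Example: `send_dp_install us-east-1 i-0123456789abcdef0 i-0fedcba9876543210`.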


AWS Quickstarts for SAP

AWS offers CloudFormation templates (AWS Quick Starts) to automate the installation of SAP applications.

| Name | Manual | Launch | GitHub sources |
|---|---|---|---|
| SAP HANA | Deployment Guide | Launch | quickstart-sap-hana |
| NetWeaver ABAP | Quick Start Reference Deployment | | quickstart-sap-netweaver-abap |


Command Line Creation of an AWS Instance for SAP HANA

The bash script shown here creates instances for SAP HANA from the command line. It uses the AWS CLI; the aws command needs to be in the search path.

Consider using the AWS Quick Start for SAP HANA instead. It deploys the AWS instance as well as the HANA software.

The script below creates the AWS instance only. It solves a number of issues for administrators:

- It tags the instance and all instance volumes. This simplifies the complete deletion of the instance.

- All volumes are marked to be deleted when the instance is terminated.

- It takes the private IP address as an input parameter.

- It takes the CIDR of the subnet in which the instance is supposed to be created.

- It enables detailed monitoring (as required by SAP).

- It uses the ebs-optimized flag to improve I/O performance.

Limitations

- The script creates instances with a private IP address in a VPC.

- It doesn't check whether the IP address matches the CIDR.

- It currently uses a disk configuration for SAP-compliant r3.8xlarge systems.

- It doesn't create VPCs or subnets.

- It uses the region of the current profile of the AWS CLI.
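The missing check of whether the IP address fits into the CIDR could be added with plain bash arithmetic. This is a sketch for IPv4 addresses only; ip_to_int and ip_in_cidr are illustrative names and not part of createHANA.sh. The address and the network are both masked with the prefix length and then compared.

```shell
#!/usr/bin/env bash
# Sketch: IPv4 "address inside CIDR block" check in pure bash.

# Converts a dotted IPv4 address to a 32-bit integer; fails on malformed input.
ip_to_int() {
    local IFS=. a b c d o
    read -r a b c d <<< "$1"
    for o in "$a" "$b" "$c" "$d"; do
        [[ "$o" =~ ^[0-9]+$ ]] && (( 10#$o <= 255 )) || return 1
    done
    echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Returns 0 if IPv4 address $1 lies inside CIDR block $2 (e.g. 10.79.7.0/24).
ip_in_cidr() {
    local ip="$1" cidr="$2" net bits ipi neti mask
    [[ "$cidr" == */* ]] || return 1
    net="${cidr%/*}"
    bits="${cidr#*/}"
    [[ "$bits" =~ ^[0-9]+$ ]] && (( 10#$bits <= 32 )) || return 1
    bits=$(( 10#$bits ))
    ipi=$(ip_to_int "$ip") || return 1
    neti=$(ip_to_int "$net") || return 1
    if (( bits == 0 )); then
        mask=0
    else
        mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    fi
    (( (ipi & mask) == (neti & mask) ))
}
```

In createHANA.sh, step 1.3 could then read `ip_in_cidr "$IP" "$CIDR" || { echo "*** 1.3 ERROR: $IP is not in $CIDR"; exit 1; }`.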

The script requires a file named disks.json. This file is currently configured to create a 200 GB gp2 boot disk, four 667 GB gp2 disks and one 50 GB gp2 disk.

Warning

This script creates AWS resources which AWS will charge you for. Be careful using this script. I don't give any warranty.

Preconditions

- Have the AWS Command Line Interface (CLI) installed on your system.

- Configure an AWS user in your AWS CLI profile with IAM permissions to create EC2 instances and tags and to run a number of describe commands. The script parses text output, so use text as the default output format of the profile.

- Have the bash shell installed on your system.

Download

- createHANA.tar, containing both files (createHANA.sh and disks.json)

Using the Script

Provide all parameters when calling the script in the following format:

./createHANA.sh ami-id pem-name instance-type ip-address cidr security-group "name tag"

Example:

```
./createHANA.sh ami-6b5a5601 myPEM r3.4xlarge 10.79.7.95 10.79.7.0/24 mySecGroup "my-HANA-System95"
*** 1. Prequisites checking
*** 1.1 OK: requested IP address 10.79.7.95 is available
*** 1.2 OK: requested CIDR 10.79.7.0/24 belongs to subnet-6b964f32 in us-east-1c , vpc-2e976742
*** 1.3 Warning: No check whether 10.79.7.95 fits into CIDR 10.79.7.0/24 !
*** 1.4 OK: requested security group mySecGroup is sg-582eec37 (Connect to Lab)
*** 1.5 OK: requested PEM myPEM exists.
*** 1.6 OK: AMI ami-6b5a5601 exists (amazon/suse-sles-12-sp1-v20160322-hvm-ssd-x86_64)
Do you want to create this instance? (y/n) Yes
*** 2. About to create the instance
*** 2.1 Created system with Id: i-9dd0e707
*** 2.2 Tagged system with Id: i-9dd0e707 with Name: my-HANA-System95
*** 2.3.1 Tagged all volumes from system with Id: i-9dd0e707 with Name: my-HANA-System95
*** 2.4 The created instance: i-9dd0e707 with Name: my-HANA-System95
RESERVATIONS 752040392274 r-684b74d9
INSTANCES 0 x86_64 None True xen ami-6b5a5601 i-9dd0e707 r3.4xlarge myPEM 2016-06-24T13:47:25.000Z None 10.79.7.95 None /dev/sda1 ebs True None subnet-6b964f32 hvm vpc-2e976742
BLOCKDEVICEMAPPINGS /dev/sda1
EBS 2016-06-24T13:47:26.000Z True attaching vol-8c836528
BLOCKDEVICEMAPPINGS /dev/sdf
EBS 2016-06-24T13:47:26.000Z True attaching vol-0f8365ab
BLOCKDEVICEMAPPINGS /dev/sdg
EBS 2016-06-24T13:47:26.000Z True attaching vol-0e8365aa
BLOCKDEVICEMAPPINGS /dev/sdh
EBS 2016-06-24T13:47:26.000Z True attaching vol-fe83655a
BLOCKDEVICEMAPPINGS /dev/sdi
EBS 2016-06-24T13:47:26.000Z True attaching vol-e983654d
BLOCKDEVICEMAPPINGS /dev/sdj
EBS 2016-06-24T13:47:26.000Z True attaching vol-ac836508
MONITORING pending
NETWORKINTERFACES None 0e:0f:2c:30:0b:b7 eni-522c1700 752040392274 10.79.7.95 True in-use subnet-6b964f32 vpc-2e976742
ATTACHMENT 2016-06-24T13:47:25.000Z eni-attach-c0de2d15 True 0 attaching
GROUPS sg-582eec37 mySecGroup
PRIVATEIPADDRESSES True 10.79.7.95
PLACEMENT us-east-1c None default
SECURITYGROUPS sg-582eec37 mySecGroup
STATE 0 pending
TAGS Name my-HANA-System95
```

The second option is to call the script without parameters and use the interactive dialog:

```
./createHANA.sh
Enter AMI name:
ami-6b5a5601
Enter name of security key:
myPEM
Enter instance type:
r3.4xlarge
Enter IP address:
10.79.7.94
Enter CIDR n the format xxx..xxx.xxx.xxx/yy:
10.79.7.0/24
Enter security group name:
mySecGroup
Enter name tags for instance and volumes:
my-HANA-System94
*** 1. Prequisites checking
*** 1.1 OK: requested IP address 10.79.7.94 is available
*** 1.2 OK: requested CIDR 10.79.7.0/24 belongs to subnet-6b964f32 in us-east-1c , vpc-2e976742
*** 1.3 Warning: No check whether 10.79.7.94 fits into CIDR 10.79.7.0/24 !
*** 1.4 OK: requested security group mySecGroup is sg-582eec37 (Connect to Lab)
*** 1.5 OK: requested PEM myPEM exists.
*** 1.6 OK: AMI ami-6b5a5601 exists (amazon/suse-sles-12-sp1-v20160322-hvm-ssd-x86_64)
Do you want to create this instance? (y/n) Yes
*** 2. About to create the instance
*** 2.1 Created system with Id: i-30ddeaaa
*** 2.2 Tagged system with Id: i-30ddeaaa with Name: my-HANA-System94
*** 2.3.1 Tagged all volumes from system with Id: i-30ddeaaa with Name: my-HANA-System94
*** 2.4 The created instance: i-30ddeaaa with Name: my-HANA-System94
RESERVATIONS 752040392274 r-2a49769b
INSTANCES 0 x86_64 None True xen ami-6b5a5601 i-30ddeaaa r3.4xlarge myPEM 2016-06-24T13:53:11.000Z None 10.79.7.94 None /dev/sda1 ebs True None subnet-6b964f32 hvm vpc-2e976742
BLOCKDEVICEMAPPINGS /dev/sda1
EBS 2016-06-24T13:53:12.000Z True attaching vol-5a8d6bfe
BLOCKDEVICEMAPPINGS /dev/sdf
EBS 2016-06-24T13:53:12.000Z True attaching vol-b38a6c17
BLOCKDEVICEMAPPINGS /dev/sdg
EBS 2016-06-24T13:53:12.000Z True attaching vol-b28a6c16
BLOCKDEVICEMAPPINGS /dev/sdh
EBS 2016-06-24T13:53:12.000Z True attaching vol-5b8d6bff
BLOCKDEVICEMAPPINGS /dev/sdi
EBS 2016-06-24T13:53:12.000Z True attaching vol-4e8d6bea
BLOCKDEVICEMAPPINGS /dev/sdj
EBS 2016-06-24T13:53:12.000Z True attaching vol-198d6bbd
MONITORING pending
NETWORKINTERFACES None 0e:cc:0c:19:f4:b1 eni-621c2730 752040392274 10.79.7.94 True in-use subnet-6b964f32 vpc-2e976742
ATTACHMENT 2016-06-24T13:53:11.000Z eni-attach-0ec635db True 0 attaching
GROUPS sg-582eec37 mySecGroup
PRIVATEIPADDRESSES True 10.79.7.94
PLACEMENT us-east-1c None default
SECURITYGROUPS sg-582eec37 mySecGroup
STATE 0 pending
TAGS Name my-HANA-System94
```

The script createHANA.sh

```shell
#!/bin/bash
# version 1.0 June 24, 2016
# This script is using the AWS CLI.
# It assumes that the aws command is part of the search path.
# All aws calls request text output since the results are parsed with awk.

AMI=$1
PEM=$2
INSTANCETYPE=$3
IP=$4
CIDR=$5
SGNAME=$6
NAMETAG=$7

case $1 in
-h | -help)
    echo "Use this command with the following options:"
    echo "$0 -h : to obtain this output"
    echo "$0 -help : to obtain this output"
    echo "$0 : enter information through a dialog"
    echo "$0 ami-id pem-name instance-type ip-address cidr security-group \"name tag\" "
    echo "Example:"
    echo " ./createHANA.sh ami-6b5a5601 myPEM r3.4xlarge 10.79.7.96 10.79.7.0/24 mySecGroup \"my-HANA-System96\""
    exit
    ;;
esac

# Ask for every parameter that was not given on the command line
if [[ -z $AMI ]]; then echo "Enter AMI name:"; read -r AMI; fi
if [[ -z $PEM ]]; then echo "Enter name of security key:"; read -r PEM; fi
if [[ -z $INSTANCETYPE ]]; then echo "Enter instance type:"; read -r INSTANCETYPE; fi
if [[ -z $IP ]]; then echo "Enter IP address:"; read -r IP; fi
if [[ -z $CIDR ]]; then echo "Enter CIDR in the format xxx.xxx.xxx.xxx/yy:"; read -r CIDR; fi
if [[ -z $SGNAME ]]; then echo "Enter security group name:"; read -r SGNAME; fi
if [[ -z $NAMETAG ]]; then echo "Enter name tags for instance and volumes:"; read -r NAMETAG; fi

echo "*** 1. Prerequisites checking"
# 1.1 The requested private IP address must not be in use yet
EXISTINGIP=$(aws ec2 describe-network-interfaces --output text --filters Name=private-ip-address,Values="$IP" | awk -F'\t' '/PRIVATEIPADDRESSES/ {print $3}' | grep "$IP")
if [ -n "$EXISTINGIP" ]; then
    INSTID=$(aws ec2 describe-network-interfaces --output text --filters Name=private-ip-address,Values="$IP" | awk -F'\t' '/ATTACHMENT/ {print $6}')
    echo "*** 1.1 ERROR: requested IP address $IP is already in use by instance $INSTID. Will stop here..."
    exit 1
else
    echo "*** 1.1 OK: requested IP address $IP is available"
fi

# 1.2 Find subnet, availability zone and VPC of the given CIDR
SUBNETINFO=$(aws ec2 describe-subnets --output text --filters Name=cidrBlock,Values="$CIDR")
SUBNET=$(echo "$SUBNETINFO" | awk -F'\t' '/SUBNETS/ {print $8}')
AZ=$(echo "$SUBNETINFO" | awk -F'\t' '/SUBNETS/ {print $2}')
VPC=$(echo "$SUBNETINFO" | awk -F'\t' '/SUBNETS/ {print $9}')
if [ -n "$SUBNET" ]; then
    echo "*** 1.2 OK: requested CIDR $CIDR belongs to $SUBNET in $AZ , $VPC"
else
    echo "*** 1.2 ERROR: no subnet found for CIDR $CIDR . Will stop here..."
    exit 1
fi

echo "*** 1.3 Warning: No check whether $IP fits into CIDR $CIDR !"

# 1.4 Resolve the security group name to its id
SGINFO=$(aws ec2 describe-security-groups --output text --filters Name=group-name,Values="${SGNAME}")
SECURITY=$(echo "$SGINFO" | awk -F'\t' '/SECURITYGROUPS/ {print $3}')
SECURITYTEXT=$(echo "$SGINFO" | awk -F'\t' '/SECURITYGROUPS/ {print $2}')
if [ -n "$SECURITY" ]; then
    echo "*** 1.4 OK: requested security group $SGNAME is $SECURITY ($SECURITYTEXT)"
else
    echo "*** 1.4 ERROR: requested security group $SGNAME not found. Will stop here"
    exit 1
fi

# 1.5 The key pair has to exist
PEMRESULT=$(aws ec2 describe-key-pairs --output text --filters Name=key-name,Values="$PEM" | awk -F'\t' '/KEYPAIRS/ {print $3}')
if [ -n "$PEMRESULT" ]; then
    echo "*** 1.5 OK: requested PEM $PEM exists."
else
    echo "*** 1.5 ERROR: requested PEM $PEM not found. Will stop here"
    exit 1
fi

# 1.6 The AMI has to exist
AMINAME=$(aws ec2 describe-images --output text --image-ids "$AMI" | awk -F'\t' '/IMAGES/ {print $6}')
if [ -n "$AMINAME" ]; then
    echo "*** 1.6 OK: AMI $AMI exists ($AMINAME)"
else
    echo "*** 1.6 ERROR: AMI $AMI does not exist. Will stop here"
    exit 1
fi

echo -n "Do you want to create this instance? (y/n) "
old_stty_cfg=$(stty -g)
stty raw -echo ; answer=$(head -c 1) ; stty "$old_stty_cfg" # Care playing with stty
if echo "$answer" | grep -iq "^y" ; then
    echo Yes
else
    echo No
    exit
fi

echo "*** 2. About to create the instance"
ID=$(aws ec2 run-instances --output text \
    --key-name "$PEM" \
    --instance-type "$INSTANCETYPE" \
    --count 1 \
    --block-device-mappings file://disks.json \
    --image-id "$AMI" \
    --monitoring Enabled=true \
    --instance-initiated-shutdown-behavior stop \
    --security-group-ids "$SECURITY" \
    --subnet-id "$SUBNET" \
    --private-ip-address "$IP" \
    --ebs-optimized | \
    awk '/INSTANCES/ {print $8}')
if [ -z "$ID" ]; then
    echo "*** 2.1 ERROR: instance creation failed. Will stop here"
    exit 1
fi
echo "*** 2.1 Created system with Id: $ID"
aws ec2 create-tags --resources "$ID" --tags "Key=Name,Value=${NAMETAG}"
echo "*** 2.2 Tagged system with Id: $ID with Name: $NAMETAG "
#echo "*** 2.3.0 will sleep for 2s before tagging the volumes with $NAMETAG "
#sleep 2
# Tag every EBS volume (5th column of the EBS lines) with the same name
for VOL in $(aws ec2 describe-instances --output text --instance-ids "$ID" | awk '/EBS/ {print $5}'); do
    aws ec2 create-tags --resources "$VOL" --tags "Key=Name,Value=${NAMETAG}"
done
echo "*** 2.3.1 Tagged all volumes from system with Id: $ID with Name: $NAMETAG "
echo "*** 2.4 The created instance: $ID with Name: $NAMETAG "
aws ec2 describe-instances --output text --instance-ids "$ID"
```
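Step 2.3 of the script finds the volume ids by their column position in the text output. A sketch that asks the AWS CLI for the ids directly with a JMESPath `--query` is shown below; the function name is hypothetical, and it assumes the same permissions as the script (describing instances, creating tags).

```shell
#!/usr/bin/env bash
# Sketch: tag all EBS volumes of an instance without relying on column positions.
tag_instance_volumes() {
    local id="$1" name="$2" vols
    vols=$(aws ec2 describe-instances --instance-ids "$id" \
        --query 'Reservations[].Instances[].BlockDeviceMappings[].Ebs.VolumeId' \
        --output text) || return 1
    [ -n "$vols" ] || { echo "no volumes found for $id" >&2; return 1; }
    # create-tags accepts several resource ids in one call;
    # $vols is intentionally unquoted so that each id becomes one argument.
    # shellcheck disable=SC2086
    aws ec2 create-tags --resources $vols --tags "Key=Name,Value=${name}"
}
```

Alternatively, run-instances can tag the instance and its volumes at launch with `--tag-specifications 'ResourceType=instance,Tags=[{Key=Name,Value=...}]' 'ResourceType=volume,Tags=[{Key=Name,Value=...}]'`, which makes the separate tagging steps unnecessary.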

The file disks.json

This file has to be located in the directory from which the script is called:

```json
[
  {"DeviceName": "/dev/sda1", "Ebs": {"VolumeSize": 200, "VolumeType": "gp2", "DeleteOnTermination": true}},
  {"DeviceName": "/dev/sdf", "Ebs": {"VolumeSize": 667, "VolumeType": "gp2", "DeleteOnTermination": true}},
  {"DeviceName": "/dev/sdg", "Ebs": {"VolumeSize": 667, "VolumeType": "gp2", "DeleteOnTermination": true}},
  {"DeviceName": "/dev/sdh", "Ebs": {"VolumeSize": 667, "VolumeType": "gp2", "DeleteOnTermination": true}},
  {"DeviceName": "/dev/sdi", "Ebs": {"VolumeSize": 667, "VolumeType": "gp2", "DeleteOnTermination": true}},
  {"DeviceName": "/dev/sdj", "Ebs": {"VolumeSize": 50, "VolumeType": "gp2", "DeleteOnTermination": true}}
]
```

Feedback

The script has limitations. Leave a comment to get in touch with me. I'll be happy to improve the script and integrate better code.


Configuring SAProuter (as a service) on Linux

Installing a saprouter on Linux is straightforward...

... at least without using SNC.

SAP routers can be used to:

- connect your production system to SAP Remote Services

- route traffic of on-premises SAP GUI users to a peered VPC

- allow on-premises SAP GUI users to reach highly available SAP systems which use an overlay IP address.

The installation playbook is:

- Create files for the service, the installation and the saprouter routing table

- Copy all files to a private S3 bucket

- Create a policy which allows the instance to pull the files from the S3 bucket

- Use an AWS CLI command to create an instance which automatically installs the saprouter

Create a Routing Table File for saprouter

Create a configuration file named saprouttab. The simplest one, which routes all ABAP traffic in all directions, is a file /usr/sap/saprouter/saprouttab with the content:

P * * *

This means: P(ermit) ALL SOURCE IP/HOSTNAMES to ALL DESTINATION IP/HOSTNAMES using a PORT-RANGE from 3200 – 3299

Create a Policy which grants Access to an S3 Bucket to Download all required Software

Create a policy which looks like the following:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::bucket-name/bucket-folder/*"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket"],
      "Resource": "arn:aws:s3:::bucket-name"
    }
  ]
}
```

Replace the following placeholders with your individual settings:

- bucket-name: the name of the bucket which stores all files to be downloaded

- bucket-folder: the subfolder which contains your download files. This part is optional.

Add this policy to a new role.

Attach the role to the instance when you create it.
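The role and the instance profile can also be created with the AWS CLI. A sketch, assuming the policy above is saved as saprouter-s3-policy.json (hypothetical file name) and that the role and the instance profile are both named saprouter-inst, the name used in the run-instances command further down. The trust policy lets EC2 instances assume the role; the S3 policy is attached as an inline policy.

```shell
#!/usr/bin/env bash
# Sketch: create role, inline S3 policy and instance profile for the saprouter instance.
# Names and file names are hypothetical defaults.
create_saprouter_profile() {
    local name="${1:-saprouter-inst}" policy_file="${2:-saprouter-s3-policy.json}"
    local trust='{"Version":"2012-10-17","Statement":[{"Effect":"Allow","Principal":{"Service":"ec2.amazonaws.com"},"Action":"sts:AssumeRole"}]}'
    aws iam create-role --role-name "$name" --assume-role-policy-document "$trust" &&
    aws iam put-role-policy --role-name "$name" --policy-name "${name}-s3-read" \
        --policy-document "file://${policy_file}" &&
    aws iam create-instance-profile --instance-profile-name "$name" &&
    aws iam add-role-to-instance-profile --instance-profile-name "$name" --role-name "$name"
}
```

The chain stops at the first failing call, so an existing role with the same name is reported instead of being modified.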

Creation of a Service

SLES 12, SLES 15 and Red Hat need a systemd service to start and stop the saprouter whenever needed. Create a file saprouter.service (the installation script below moves it to /etc/systemd/system):

```ini
[Unit]
Description=SAP Router Configuration
After=syslog.target network.target

[Service]
Type=simple
RemainAfterExit=yes
WorkingDirectory=/usr/sap/saprouter
ExecStart=/usr/sap/saprouter/saprouter -r
ExecStop=/usr/sap/saprouter/saprouter -s
KillMode=none
Restart=no

[Install]
WantedBy=multi-user.target
```

Start the service with the commands:

```shell
systemctl daemon-reload
systemctl enable saprouter.service
systemctl start saprouter.service
```

Create an Installation Script

Create a file install.sh:

```shell
#!/usr/bin/env bash
# version 0.2
# December, 2018
## Run script as super user.
# This script needs one parameter: the URL of the S3 folder
# with all downloadable files.
# Use the notation s3://my-bucket/myfolder
set -euo pipefail

BUCKET=${1:?"usage: $0 s3://my-bucket/myfolder"}
SERVICE="saprouter.service"
ROUTDIR="/usr/sap/saprouter"

# Renames every file matching the glob $1 to $2 (skips files already named $2)
normalize() {
    local f
    for f in $1; do
        if [ "$f" != "$2" ]; then mv -v "$f" "$2"; fi
    done
}

echo "*** 1. Create ${ROUTDIR}"
mkdir -p "${ROUTDIR}/install"

echo "*** 2. Download files"
aws s3 sync "${BUCKET}" "${ROUTDIR}/install"
cd "${ROUTDIR}/install"
# Convert the names of all downloaded files to lower case
for f in *; do
    lc=$(echo "$f" | tr '[:upper:]' '[:lower:]')
    if [ "$f" != "$lc" ]; then mv -v "$f" "$lc"; fi
done
chmod u+x uninstall.sh
mv uninstall.sh ..
mv "${SERVICE}" "/etc/systemd/system/${SERVICE}"
normalize 'saprouter*.sar' saprouter.sar
normalize 'sapcryptolib*.sar' sapcryptolib.sar
normalize 'sapcar*' sapcar
chmod u+x sapcar
mv saprouttab ..

echo "*** 3. Unpack files"
cd "${ROUTDIR}"
./install/sapcar -xf "${ROUTDIR}/install/saprouter.sar"
./install/sapcar -xf "${ROUTDIR}/install/sapcryptolib.sar"

echo "*** 4. Start service"
systemctl daemon-reload
systemctl enable "${SERVICE}"
systemctl start "${SERVICE}"
echo "*** 5. Done..."
```

The script works if the download bucket contains exactly one file each with names like sapcar*, sapcryptolib*.sar and saprouter*.sar. Capitalization does not matter. Update the bucket-name and bucket-folder variables to match your individual needs.

Create a De-installation Script

Create a file named uninstall.sh:

```shell
#!/usr/bin/env bash
# version 0.1
# December, 2018
## Run as super user:
echo "1. Stopping and disabling service"
systemctl stop saprouter.service
systemctl disable saprouter.service
echo "2. Removing files"
rm /etc/systemd/system/saprouter.service
# Reload systemd after the unit file is gone
systemctl daemon-reload
rm -rf /usr/sap/saprouter
echo "3. Completed deinstallation"
```

Files Upload

Upload the following files to the S3 bucket:

- sapcar

- Cryptolib installation file

- saprouter installation file

- saprouttab

- install.sh

- uninstall.sh

- saprouter.service

There is no need to make this bucket public. The instance will have an IAM instance profile which entitles it to download the required files.
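Before uploading, a quick local check catches the most common mistake, a missing or duplicated archive. A sketch, assuming all files are collected in one local folder (the folder name in the usage line is hypothetical). It applies the rule stated for install.sh: exactly one file per pattern, capitalization ignored, plus the helper files under their exact names.

```shell
#!/usr/bin/env bash
# Sketch: validate a local upload folder for the saprouter installation.
check_upload_dir() {
    local dir="$1" rc=0 pattern count f
    # Exactly one archive per pattern, any capitalization
    for pattern in 'sapcar*' 'sapcryptolib*.sar' 'saprouter*.sar'; do
        count=$(find "$dir" -maxdepth 1 -type f -iname "$pattern" | wc -l)
        if [ "$count" -ne 1 ]; then
            echo "expected one file matching ${pattern}, found ${count}" >&2
            rc=1
        fi
    done
    # Helper files under their exact names
    for f in saprouttab install.sh uninstall.sh saprouter.service; do
        [ -f "${dir}/${f}" ] || { echo "missing ${f}" >&2; rc=1; }
    done
    return "$rc"
}
```

Usage: `check_upload_dir ./saprouter-upload && aws s3 sync ./saprouter-upload s3://bucket-name/bucket-folder`.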

Create a UserData File on your Administration PC

Create a file prep.sh:

```
Content-Type: multipart/mixed; boundary="//"
MIME-Version: 1.0

--//
Content-Type: text/cloud-config; charset="us-ascii"
MIME-Version: 1.0
Content-Transfer-Encoding: 7bit
Content-Disposition: attachment; filename="cloud-config.txt"

#cloud-config
cloud_final_modules:
- [scripts-user, always]

--//
Content-Type: text/x-shellscript; charset="us-ascii"
MIME-Version: 1.0
Content-Transfer-Encoding: 7bit
Content-Disposition: attachment; filename="userdata.txt"

#!/bin/bash
BUCKET="s3://bucket-name/bucket-folder"
# take a one second nap before moving on...
sleep 1
aws s3 cp "${BUCKET}/install.sh" /tmp/install.sh
chmod u+x /tmp/install.sh
/tmp/install.sh "$BUCKET"
--//--
```

Replace bucket-name and bucket-folder with the appropriate values.

The script part of this file is executed when the instance is launched. The cloud-config part ([scripts-user, always]) makes cloud-init run it at every boot.

Installation of the Instance

The following command launches an instance with an automated saprouter installation. It assumes that

- The local account has the AWS CLI (Command Line Interface) configured

- The AMI ID is a SLES 12 or SLES 15 AMI available in the region (image-id parameter)

- There is a security group which has the appropriate ports open (security-group-ids parameter)

- The file prep.sh is in the directory where the command gets launched

- There is a subnet with Internet access and access to the SAP systems (subnet-id parameter)

- There is an IAM role which grants access to the appropriate S3 bucket (iam-instance-profile parameter)

- aws-key is the name of an AWS key pair which allows logins through ssh (key-name parameter). It needs to exist beforehand.

The command is:

```shell
aws ec2 run-instances --image-id ami-XYZ \
    --count 1 --instance-type m5.large \
    --key-name aws-key \
    --associate-public-ip-address \
    --security-group-ids sg-XYZ \
    --subnet-id subnet-XYZ \
    --iam-instance-profile Name=saprouter-inst \
    --tag-specifications 'ResourceType=instance,Tags=[{Key=Name,Value=PublicSaprouter}]' \
    --user-data file://prep.sh
```

This command creates an instance with

- a public IP address

- a running saprouter

- a service being configured for the saprouter

- SAP Cryptolib currently gets unpacked but not configured (stay tuned)
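After the launch, it can take a few minutes until the user data has installed and started the saprouter. A sketch that waits for the instance and then probes the saprouter port, assuming the default saprouter port 3299, a security group that admits the administration PC, and bash's /dev/tcp support. The instance id can be obtained by appending `--query 'Instances[0].InstanceId' --output text` to the run-instances command. The function names and the TRIES/INTERVAL variables are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: wait for the saprouter instance and probe port 3299.

# Returns 0 if a TCP connection to host $1, port $2 succeeds within 5 seconds.
port_open() {
    timeout 5 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

wait_for_saprouter() {
    local id="$1" tries="${TRIES:-30}" interval="${INTERVAL:-10}" ip i
    aws ec2 wait instance-running --instance-ids "$id" || return 1
    # Use PrivateIpAddress instead for an internal saprouter
    ip=$(aws ec2 describe-instances --instance-ids "$id" \
        --query 'Reservations[0].Instances[0].PublicIpAddress' --output text) || return 1
    for (( i = 0; i < tries; i++ )); do
        if port_open "$ip" 3299; then
            echo "saprouter answers on ${ip}:3299"
            return 0
        fi
        sleep "$interval"
    done
    echo "no answer on ${ip}:3299 after ${tries} attempts" >&2
    return 1
}
```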

Installation as VPC internal saprouter as a proxy to relay traffic from on-premises users

Omit the parameter --associate-public-ip-address. It assigns a public IP address, which you don't want for an internal saprouter.

Installation with the help of an AWS CloudFormation template

Use this template (saprouter.template). It works with SLES 12 SP3. Replace the AMIs if you need a higher revision.

- Upload the template to an S3 bucket

- Upload the SAP installation media and the file saprouttab to an S3 bucket

- Launch the template in CloudFormation

Warning: Please review the template beforehand. It allocates resources in your AWS account and has the potential to do damage.

More Information

Consult the SAP documentation to configure SNC or more detailed routing entries.


HANA Cheat Sheet

Starting and Stopping HANA

Start HANA instance with hostctrl as root:

/usr/sap/hostctrl/exe/sapcontrol -nr <instance number> -function Start

Stop HANA instance with hostctrl as root:

/usr/sap/hostctrl/exe/sapcontrol -nr <instance number> -function Stop

Start HANA as <sid>adm:

/usr/sap/<SID>/HDB<instance number>/HDB start

Example: /usr/sap/KB1/HDB26/HDB start

Stop the SAP HANA system as <sid>adm by entering the following command:

/usr/sap/<SID>/HDB<instance number>/HDB stop

HANA Backups Command Line

Systems with XSA may have multiple tenant databases, which all need to be backed up. Example:

$ hdbsql -u system -d systemdb -i 00 "BACKUP DATA USING FILE ('backup')"

$ hdbsql -u system -d systemdb -i 00 "BACKUP DATA FOR HDB USING FILE ('backup')"
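To back up every tenant without listing them by hand, the tenant names can be read from SYSTEMDB. A sketch, assuming instance number 00, the SYSTEM user with its password in the hypothetical HDB_PW variable, and the monitoring view M_DATABASES. In production, an hdbuserstore key is preferable to a password on the command line. All backups share one time-stamped file prefix so that a new run does not overwrite the previous one.

```shell
#!/usr/bin/env bash
# Sketch: back up SYSTEMDB and then every active tenant database.
INST="${INST:-00}"

# Runs one SQL statement against SYSTEMDB; -a suppresses column names,
# -x suppresses additional output such as row counts.
run_systemdb_sql() {
    hdbsql -i "$INST" -d SYSTEMDB -u SYSTEM -p "$HDB_PW" -a -x "$1"
}

# Prints the names of all active tenant databases, one per line.
list_tenants() {
    local out
    out=$(run_systemdb_sql "SELECT DATABASE_NAME FROM M_DATABASES WHERE ACTIVE_STATUS = 'YES' AND DATABASE_NAME <> 'SYSTEMDB'") || return 1
    printf '%s\n' "$out" | tr -d '"' | sed '/^[[:space:]]*$/d'
}

# Backs up SYSTEMDB and all tenants with a common file prefix.
backup_all() {
    local prefix="${PREFIX:-backup_$(date +%Y%m%d_%H%M)}" tenants db
    run_systemdb_sql "BACKUP DATA USING FILE ('${prefix}')" || return 1
    tenants=$(list_tenants) || return 1
    for db in $tenants; do
        echo "backing up tenant ${db}"
        run_systemdb_sql "BACKUP DATA FOR ${db} USING FILE ('${prefix}')" || return 1
    done
}
```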


High Availability Solutions for SAP on AWS

The SAP on Amazon Web Services High Availability Guide describes Windows and Linux architectures with failover scenarios.

This page focuses on solutions which can automatically fail over SAP services from one AWS server to another.

The AWS cloud implements high availability differently from traditional on-premises implementations:

- A failing instance can be restarted automatically. AWS automatically provides the required resources when an instance that became unavailable is restarted. There is no need to keep standby spare instances.

- AWS regions provide multiple availability zones which are far enough apart not to be hit by the same disasters and close enough to provide low-latency, high-bandwidth connections. SAP customers will want to use architectures which exploit these completely independent availability zones. Using two independent sites in such a "metro-cluster" setup is typically very expensive to implement on premises.

- HA solutions can be implemented the same way in all AWS regions. AWS provides a homogeneous infrastructure which allows HA systems to be operated in all regions of the world.

SAP has a list of certified HA-Interface Partners. AWS is not on this list, since the certified HA-Interface Partners support the AWS platform as one of their configurations. The following partners and solutions are known to support the AWS platform:


NEC Express Cluster 3.3

Product: NEC Express Cluster 3.3 (Product landing page)

Failover Services: HANA scale-up databases on Red Hat Linux

Licensing: NEC licenses depending on the services

Status: released, supported

The NEC Cluster relies on the SAP HANA system replication. It works across AWS availability zones within a region.

The NEC cluster uses AWS overlay IP addresses, which support a fast failover. The NEC cluster does not shut down a node which no longer provides the service; it fails over to the standby node.

More Resources

- Documentation: EXPRESSCLUSTER X 3.1 HA Cluster Configuration Guide for Amazon Web Services

- Documentation: EXPRESSCLUSTER X 3.1 for Linux SAP NetWeaver System Configuration Guide

- SAP Note 1768213: Support details for NEC EXPRESSCLUSTER

- SAP Note 1841837: Support Details for NEC EXPRESSCLUSTER Support on SAP NetWeaver Systems

- SAP Note 2302728: Supported scenarios with NEC Expresscluster on Amazon Web Services

- SCN Article with AWS mention: High Availability with NEC Express Cluster

AWS Specific Configuration Details

Be aware that the NEC cluster changes the network topology. The privileges required for these operations allow changes to the AWS network topology of an account. Verify and test all entries very carefully. Limit the access of users working on the NEC Express Cluster nodes to the required minimum.

Required Routing Entries

The NEC cluster typically operates in a single VPC. The cluster nodes are typically located in different availability zones for increased availability. Therefore they have their primary IP addresses in different subnets.

AWS overlay IP addresses are based on routing entries which send the traffic for an IP address to a given instance (the active NEC cluster node). The NEC Express Cluster changes these routing entries when needed. It does not, however, create them. The initial routing entries have to be created manually, and the same routing entry has to be created in all routing tables of the given VPC.

The AWS VPC console can be used to add this entry. The AWS Command Line Interface offers the following command as well:

aws ec2 create-route --route-table-id ROUTE_TABLE --destination-cidr-block CIDR --instance-id INSTANCE

With the legacy EC2 API tools, the equivalent command was ec2addrt ROUTE_TABLE -r CIDR -i INSTANCE. Use the AWS instance id of any cluster node as INSTANCE. The NEC Express Cluster will then update this entry as needed.

The NEC cluster only operates correctly if the routing entry has been created in all routing tables of the VPC!
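Creating the same entry in every routing table of the VPC can be scripted. A sketch, assuming the AWS CLI is configured for the account and region of the cluster; the function name and the overlay address in the usage line are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: create the overlay IP route in every route table of a VPC.
# Arguments: VPC id, overlay CIDR (typically a /32), instance id of one cluster node.
add_overlay_route() {
    local vpc="$1" cidr="$2" instance="$3" tables rt
    tables=$(aws ec2 describe-route-tables \
        --filters "Name=vpc-id,Values=${vpc}" \
        --query 'RouteTables[].RouteTableId' --output text) || return 1
    [ -n "$tables" ] || { echo "no route tables found in ${vpc}" >&2; return 1; }
    for rt in $tables; do
        echo "adding ${cidr} -> ${instance} to ${rt}"
        aws ec2 create-route --route-table-id "$rt" \
            --destination-cidr-block "$cidr" --instance-id "$instance" || return 1
    done
}
```

Usage: `add_overlay_route vpc-2e976742 192.168.10.10/32 i-9dd0e707`. The function stops at the first route table where create-route fails, for example because the route already exists.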

AWS Instance Configuration for Cluster Nodes

The AWS cluster nodes have to be able to communicate through a second IP address. The document IP Failover with Overlay IP Addresses on this site describes how to disable the source/destination check for AWS instances and how to host a second IP address on the same Linux system.
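Both preparations can be sketched as follows, assuming the AWS CLI and the iproute2 `ip` command are available and the commands run as root; the function name and the overlay address in the usage line are hypothetical. The source/destination check has to be disabled on every cluster node; the address itself is normally hosted only on the active node and managed by the cluster afterwards.

```shell
#!/usr/bin/env bash
# Sketch: prepare one cluster node for an overlay IP address.
# Arguments: instance id, overlay IPv4 address, interface (default eth0).
prepare_cluster_node() {
    local id="$1" overlay_ip="$2" dev="${3:-eth0}"
    # Let the instance send and receive traffic for an address it does not own
    aws ec2 modify-instance-attribute --instance-id "$id" --no-source-dest-check || return 1
    # Host the overlay address as an additional /32 address on the interface
    ip address add "${overlay_ip}/32" dev "$dev"
}
```

Usage on the active node: `prepare_cluster_node i-9dd0e707 192.168.10.10`. AWS overlay IP addresses are usually chosen outside the CIDR ranges of the VPC.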

IAM Policies: NEC-HA-Policy

The cluster nodes will require the following privileges to operate:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Stmt1424870324000",
      "Effect": "Allow",
      "Action": [
        "ec2:DescribeInstances",
        "ec2:DescribeInstanceAttribute",
        "ec2:DescribeTags",
        "ec2:DescribeVpcs",
        "ec2:DescribeNetworkInterfaces",
        "ec2:DescribeAvailabilityZones"
      ],
      "Resource": "*"
    },
    {
      "Sid": "Stmt1424860166260",
      "Action": [
        "ec2:CreateRoute",
        "ec2:DeleteRoute",
        "ec2:DescribeRouteTables",
        "ec2:ReplaceRoute"
      ],
      "Effect": "Allow",
      "Resource": "*"
    }
  ]
}
```


Red Hat Pacemaker for SAP Applications

Red Hat supports the protection of SAP HANA databases on AWS starting with Red Hat Enterprise Linux 7.4.

Access to documentation requires a Red Hat customer account with the appropriate entitlement. Please read:

- Installing and Configuring a Red Hat Enterprise Linux 7.4 (and later) High-Availability Cluster on Amazon Web Services

- Configure SAP HANA System Replication in Pacemaker on Amazon Web Services

- Configure SAP Netweaver ASCS/ERS with Standalone Resources on Amazon Web Services (AWS)

- SAP note: 2765525 - Red Hat Enterprise Linux High Availability Add-On on AWS for SAP NetWeaver and SAP HANA


Bad Hair Days (with Red Hat Pacemaker)

This page documents known problems with the Red Hat Pacemaker cluster. The problems typically arise from incorrect configurations.

Symptom: Virtual IP Service doesn't start

Problem: A manual start fails with the following output:

```
[root@myNode1 ~]# pcs resource debug-start s4h_vip_ascs20 --full
... ...
 > stderr: Unknown output type: test
 > stderr: WARNING: command failed, rc: 255
```

Solution: Fix the AWS CLI configuration. The output format is wrong (test instead of text); it has to be text:

```
[root@myNode1 ~]# aws configure
AWS Access Key ID [None]:
AWS Secret Access Key [None]:
Default region name [us-east-1]:
Default output format [test]: text
```
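The same fix can be applied without the interactive dialog, for example on every cluster node. A sketch using `aws configure get` and `aws configure set`; the function name is hypothetical, and the profile argument has to name the profile your cluster resources actually use (default if none is configured).

```shell
#!/usr/bin/env bash
# Sketch: make sure an AWS CLI profile uses the text output format.
ensure_text_output() {
    local profile="${1:-default}" current
    current=$(aws configure get output --profile "$profile" 2>/dev/null)
    if [ "$current" != "text" ]; then
        echo "profile ${profile}: output format '${current:-<unset>}' -> text"
        aws configure set output text --profile "$profile"
    fi
}
```

Run it as root, since the cluster resources call the AWS CLI with root's configuration.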


SUSE SLES for SAP

Product: SLES for SAP 12 (Product landing page)

Failover Services: HANA scale-up databases and NetWeaver central systems

Licensing: Bring your own SUSE subscription or use the AWS Marketplace SUSE Linux Enterprise Server for SAP Applications 12 SP3 offering.

Status: Full support starting with SLES for SAP 12 SP1

This product relies on the SAP HANA system replication. It will monitor the master and the slave node for health. The Linux Cluster will failover a service IP address to the previous slave node when needed. The fencing agents will then reboot the previouse master node.

See:

- SAP NetWeaver High Availability Cluster 7.40 for the AWS Cloud - Setup Guide

- SUSE Linux Enterprise Server for SAP Applications 12 SP3 for the AWS Cloud - Setup Guide

More Resources:

- Technical presentation SUSECon 2015: Fast SAP HANA Fail Over Architecture with a SUSE High Availability Cluster in the AWS Cloud

- 15 minutes video showing an automated failover

- SAP note: (1765442) Joint support SAP SUSE (SLES High Availability)

- SAP note: (2309342) SUSE Linux Enterprise High Availability Extension on AWS

- SAP note: (1763512) Support details for SUSE Linux Enterprise High Availability

- SUSE Setup Guide:

- SLES 11 (no AWS support): Automate your SAP HANA System Replication Failover

- SLES for SAP 12 SP1 (with AWS support): SAP HANA SR Performance Optimized Scenario

- Agent sources (not individually required when SLES for SAP is being used)

- Open source AWS fencing agent in github

- Open source AWS move ip agent in github

- AWS Quickstart to install SLES HAE with HANA DB


Troubleshooting the Configuration

Verification and debugging of the aws-vpc-move-ip Cluster Agent

As root user, run the following command using the same parameters as in your cluster configuration:

# OCF_RESKEY_address=<virtual_IPv4_address> OCF_RESKEY_routing_table=<AWS_route_table> OCF_RESKEY_interface=eth0 OCF_RESKEY_profile=cluster OCF_ROOT=/usr/lib/ocf /usr/lib/ocf/resource.d/suse/aws-vpc-move-ip monitor

Stop the overlay IP address on a given node

# OCF_RESKEY_address=<virtual_IPv4_address> OCF_RESKEY_routing_table=<AWS_route_table> OCF_RESKEY_interface=eth0 OCF_RESKEY_profile=cluster OCF_ROOT=/usr/lib/ocf /usr/lib/ocf/resource.d/suse/aws-vpc-move-ip stop

Start the overlay IP address on a given node

As root user, run the following command using the same parameters as in your cluster configuration:

# OCF_RESKEY_address=<virtual_IPv4_address> OCF_RESKEY_routing_table=<AWS_route_table> OCF_RESKEY_interface=eth0 OCF_RESKEY_profile=cluster OCF_ROOT=/usr/lib/ocf /usr/lib/ocf/resource.d/suse/aws-vpc-move-ip start

Check DEBUG output for error messages and verify that the virtual IP address is active on the current node with the command ip a.
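The manual calls above differ only in the action, so a small wrapper can keep the parameters in one place. This is a sketch: the function name run_move_ip, its argument order and the default profile cluster are assumptions, while the environment variables and the agent path are the ones used above.

```shell
# Hypothetical wrapper around the SUSE aws-vpc-move-ip agent for manual tests.
# AGENT can be overridden, e.g. to point at a stub while testing the wrapper.
AGENT="${AGENT:-/usr/lib/ocf/resource.d/suse/aws-vpc-move-ip}"

# Usage: run_move_ip <start|stop|monitor> <overlay-ip> <route-table-id> [profile] [interface]
run_move_ip() {
  local action="$1" address="$2" rtb="$3" profile="${4:-cluster}" iface="${5:-eth0}"
  case "$action" in
    start|stop|monitor) ;;
    *) echo "unsupported action: $action" >&2; return 2 ;;
  esac
  if [ -z "$address" ] || [ -z "$rtb" ]; then
    echo "overlay IP address and route table id are required" >&2
    return 2
  fi
  # Same environment as the manual calls; the agent's exit code is passed
  # through (OCF convention: 0 = success/running, 7 = not running).
  OCF_ROOT=/usr/lib/ocf \
  OCF_RESKEY_address="$address" \
  OCF_RESKEY_routing_table="$rtb" \
  OCF_RESKEY_interface="$iface" \
  OCF_RESKEY_profile="$profile" \
    "$AGENT" "$action"
}
```

Example: run_move_ip monitor 192.168.10.22 rtb-xxx, followed by echo $? to see the agent's result.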

Testing the STONITH Agent

The STONITH agent shuts down the other node if it considers that node no longer reachable. As super user, you can call the agent manually on cluster node 1 to shut down cluster node 2. Use the same parameters as in the STONITH agent configuration:

# stonith -t external/ec2 profile=<AWS-profile> port=<cluster-node2> tag=<aws_tag_containing_hostname> -T off <cluster-node2>

This command will shut down cluster node 2. If it does not work as planned, check the errors reported during its execution.

Restart cluster node 2 and test STONITH the other way around.

The parameters used here are:

- AWS-profile: The profile used by the AWS CLI. Check the file ~/.aws/config for the matching one. The AWS CLI command aws configure list provides the same information.

- cluster-node2: The name or IP address of the other cluster node

- aws_tag_containing_hostname: This is the name of the tag of the EC2 instances for the two cluster nodes. We used the name pacemaker in this documentation.

Checking Cluster Log Files

Check the file: /var/log/cluster/corosync.log

Useful Commands

As super user:

| Command | Purpose |
|---|---|
| crm_resource -C | Clear the failure warnings shown by crm status |
| crm configure edit | Edit the whole cluster configuration (all agents) in vi |
| crm configure property maintenance-mode=true | Put Pacemaker into maintenance mode. This lets you reconfigure, start, stop, or resync SAP HANA. |
| crm configure property maintenance-mode=false | Bring Pacemaker back from maintenance mode into controlling, production mode. Pacemaker then re-explores the current configuration, which can take a few seconds. |

SAP HANA related commands (as <sid>adm user)

| Command | Purpose |
|---|---|
| hdbcons -e hdbindexserver 'replication info' | Check in detail whether HANA is replicating |
| hdbnsutil -sr_state | Check whether HANA is replicating. Shows the master/slave relationship |
| SAPHanaSR-showAttr | Cluster tool which checks the current configuration. Run as super user |
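When you work in maintenance mode, it is easy to forget to switch it off again. The hypothetical helper below wraps a command between the two crm configure property calls from the table and always switches maintenance mode off afterwards, even if the command fails. The function name and the CRM override variable are assumptions made for this sketch.

```shell
# Hypothetical helper: run a command with Pacemaker in maintenance mode and
# switch maintenance mode off again afterwards, even if the command fails.
# CRM can be overridden (e.g. with a stub) for testing.
# Usage (as super user): with_maintenance_mode <command> [args...]
with_maintenance_mode() {
  local rc
  # Do not run the command if maintenance mode could not be enabled.
  "${CRM:-crm}" configure property maintenance-mode=true || return
  "$@"
  rc=$?
  "${CRM:-crm}" configure property maintenance-mode=false
  return "$rc"
}
```

For example, with_maintenance_mode su - <sid>adm -c "HDB info" runs a HANA command as the <sid>adm user while the cluster leaves the resources alone.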


Bad Hair Days (with SLES for SAP)

Bugs I ran into:

Symptom: Virtual IP Address doesn't get hosted

Manual testing of virtual IP address agent (start option) creates the following output:

INFO: EC2: Moving IP address 192.168.10.22 to this host by adjusting routing table rtb-xxx

INFO: monitor: check routing table (API call)

DEBUG: executing command: /usr/bin/aws --profile cluster --output text ec2 describe-route-tables --route-table-ids rtb-xxx

DEBUG: executing command: ping -W 1 -c 1 192.168.10.22

WARNING: IP 192.168.10.22 not locally reachable via ping on this system

INFO: EC2: Adjusting routing table and locally configuring IP address

DEBUG: executing command: /usr/bin/aws --profile cluster ec2 replace-route --route-table-id rtb-xxx --destination-cidr-block 192.168.10.22/32 --instance-id i-1234567890

DEBUG: executing command: ip addr delete 192.168.10.22/32 dev eth0

RTNETLINK answers: Cannot assign requested address

WARNING: command failed, rc 2

INFO: monitor: check routing table (API call)

The host can't add the IP address to eth0.

Problem: SUSE netconfig hasn't been disabled

Solution: Set CLOUD_NETCONFIG_MANAGE='no' in /etc/sysconfig/network/ifcfg-eth0
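The fix can be scripted so that it is applied identically on both nodes. A minimal sketch, assuming GNU sed (as shipped with SLES) and the file path given above; the function name is hypothetical. It rewrites an existing CLOUD_NETCONFIG_MANAGE line or appends one if the file has none.

```shell
# Ensure CLOUD_NETCONFIG_MANAGE='no' in an ifcfg file (default: eth0).
# Usage (as root): disable_cloud_netconfig [ifcfg-file]
disable_cloud_netconfig() {
  local file="${1:-/etc/sysconfig/network/ifcfg-eth0}"
  if grep -q '^CLOUD_NETCONFIG_MANAGE=' "$file"; then
    # Replace whatever value is set today (GNU sed in-place edit).
    sed -i "s/^CLOUD_NETCONFIG_MANAGE=.*/CLOUD_NETCONFIG_MANAGE='no'/" "$file"
  else
    echo "CLOUD_NETCONFIG_MANAGE='no'" >> "$file"
  fi
}
```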

Symptom: Virtual IP Address gets removed after some minutes

corosync logs show a line like:

rsc_ip_XXX_XXXX_start_0:17147:stderr [ An error occurred (UnauthorizedOperation) when calling the ReplaceRoute operation: You are not authorized to

Problem: The instance does not have the right to modify routing tables.

Solution: The virtual IP address policy has a problem. It may be missing. It may have a typo. Another policy may disallow access to routing tables.

Symptom: Nodes fence each other

The log file shows lines like:

2018-10-11T11:14:06.597541-04:00 my-hostname pengine[1234]: error: Resource rsc_ip_ABC_DEF01 (ocf::aws-vpc-move-ip) is active on 2 nodes attempting recovery

2018-10-11T11:14:06.597766-04:00 my-hostname pengine[1234]: warning: See http://clusterlabs.org/wiki/FAQ#Resource_is_Too_Active for more information.

Problem: There is a bug in the aws-vpc-move-ip agent. The monitoring has a glitch: the cluster thinks that both nodes host the IP address on eth0, and the nodes fence each other.

Solution: Update the package in question. If this doesn't help, contact SUSE, or modify all aws-vpc-move-ip resources in your CIB by adding monapi=true to the parameters of each aws-vpc-move-ip resource.

Symptom: Nodes fence each other

Both nodes shut down. The corosync log looks like:

Jan 07 07:31:17 [4750] my-hostname corosync notice [TOTEM ] A processor failed, forming new configuration.

Jan 07 07:31:25 [4750] my-hostname corosync notice [TOTEM ] A new membership (w.x.y.z:52) was formed. Members left: 2

Jan 07 07:31:25 [4750] my-hostname corosync notice [TOTEM ] Failed to receive the leave message. failed: 2

Problem: The corosync token did not arrive within 5 seconds, even after 6 retransmission attempts. Check whether the communication between the two servers works as intended.

Solution: If the network is fine, increase the following corosync parameters:

- token: from 5000 to 30000

- consensus: from 7500 to 32000

- token_retransmits_before_loss_const: from 6 to 10

Decrease these parameters later on as long as the cluster runs stable. These changes have the following impact:

- The cluster will give up on corosync communication after (token) 30 seconds

- The time out for an individual token gets increased to token/retransmit : 30000ms/10 = 3s

- The cluster will attempt (token_retransmits_before_loss_const) 10 times to reestablish communication instead of 6 times

- The consensus parameter has to be larger than the token parameter

This configuration will increase the time for a cluster to recognize the communication failure and take over!
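The numbers above can be sanity-checked with a small helper. It is a sketch: the per-token value uses this page's approximation (token divided by token_retransmits_before_loss_const), and the consensus check uses the rule from the corosync.conf(5) man page as I read it, which is stricter than "larger than token": consensus must be at least 1.2 × token. For token 30000 that gives 36000, so 32000 would not pass; verify this against the documentation of the corosync version you run. The function name check_totem is hypothetical.

```shell
# Sanity-check corosync totem timings (all values in milliseconds).
# Usage: check_totem <token> <consensus> <token_retransmits_before_loss_const>
check_totem() {
  local token="$1" consensus="$2" retrans="$3"
  # Per-token timeout as approximated on this page: token / retransmits.
  echo "per-token timeout: ~$(( token / retrans )) ms"
  # corosync.conf(5): consensus must be at least 1.2 * token.
  # Integer math: consensus * 10 >= token * 12.
  if [ $(( consensus * 10 )) -lt $(( token * 12 )) ]; then
    echo "consensus $consensus ms is below 1.2 * token ($(( token * 12 / 10 )) ms)"
    return 1
  fi
  echo "consensus ok"
}
```

Example: check_totem 30000 36000 10 prints the ~3000 ms per-token estimate followed by consensus ok.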


Symptom: Both nodes shut down after a while

The log file shows lines like:

2018-10-12T08:33:10.477900-04:00 xxx stonith-ng[2199]: warning: fence_legacy[32274] stderr: [ An error occurred (UnauthorizedOperation) when calling the StopInstances operation: You are not authorized to perform this operation. Encoded authorization failure message: Q5Edo8F0xvippgHSKd11QKshu_Hhc3Z8Es_D9O4PYkrLrqY_o6ziaM0JkUrCwadpplJsJreOGxwCTEGd-f68XYc82Dz- HqBZmIrwacTFsYxa0fAQLOA6stHTc2OolBqD-X-HsKZ-bOMjAXs69RT04MRAgNVWJPXeAtq4PHZqN5nne8ocnsshgCt_5xkdjGnxp5VsfzE6o75OUtdHKtblq- 8MokX1ItkZKdohocthhQdQyhGlG8HT1loxdDSuG50LE-kHwGo1slNnZOa-Rw3rPKi0tNzpPvDvlMR3_OXwyC

2018-10-12T08:33:10.478589-04:00 xxx stonith-ng[2199]: error: Operation 'poweroff' [32274] (call 56 from crmd.2205) for host 'haawnulsmqaci' with device 'res_AWS_STONITH' returned: -62 (Timer expired)

2018-10-12T08:33:10.478793-04:00 xxx stonith-ng[2199]: warning: res_AWS_STONITH:32274 [ Performing: stonith -t external/ec2 -T off xxx ]

2018-10-12T08:33:10.478978-04:00 xxx stonith-ng[2199]: error: Operation poweroff of haawnulsmqaci by awnulsmqaci for crmd.2205@awnulsmqaci.98fa9afe: Timer expired

2018-10-12T08:33:10.479151-04:00 xxx crmd[2205]: notice: Stonith operation 56/53:87:0:c76c1861-5fd3-4132-a36c-8f22794a6f1b: Timer expired (-62)

2018-10-12T08:33:10.479340-04:00 xx crmd[2205]: notice: Stonith operation 56 for haawnulsmqaci failed (Timer expired): aborting transition.

Problem: A node can't shut down the other node because the STONITH policies are missing or not configured appropriately.

Solution: Add the STONITH policy as described in the installation manual. Make sure that the policy uses the appropriate AWS instance IDs. Test them individually!
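Both permission problems on this page (ReplaceRoute for the overlay IP address, StopInstances for STONITH) can be tested individually with the EC2 --dry-run option, which checks the caller's permissions without performing the action. EC2 answers a permitted dry run with a DryRunOperation error and a forbidden one with UnauthorizedOperation. The helper names and the AWS override variable below are hypothetical; the aws ec2 subcommands and options are the standard ones.

```shell
# Classify the combined output of an EC2 "--dry-run" call.
classify_dry_run() {
  case "$1" in
    *DryRunOperation*) echo "allowed" ;;
    *UnauthorizedOperation*) echo "denied" ;;
    *) echo "unknown" ;;
  esac
}

# Overlay IP agent permission. AWS can be overridden (e.g. a stub) for testing.
# Usage: check_replace_route <profile> <route-table-id> <overlay-ip>/32 <instance-id>
check_replace_route() {
  classify_dry_run "$("${AWS:-aws}" --profile "$1" ec2 replace-route --dry-run \
    --route-table-id "$2" --destination-cidr-block "$3" --instance-id "$4" 2>&1)"
}

# STONITH agent permission, run on one node against the other node's instance.
# Usage: check_stop_instances <profile> <instance-id-of-the-other-node>
check_stop_instances() {
  classify_dry_run "$("${AWS:-aws}" --profile "$1" ec2 stop-instances --dry-run \
    --instance-ids "$2" 2>&1)"
}
```

Run both checks on each node with the profile from the cluster configuration; anything other than allowed points to the policy.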

Symptom: Confusing messages after crm configure commands

Example:

host01:~ # crm configure property maintenance-mode=false

WARNING: cib-bootstrap-options: unknown attribute 'have-watchdog'

WARNING: cib-bootstrap-options: unknown attribute 'stonith-enabled'

WARNING: cib-bootstrap-options: unknown attribute 'placement-strategy'

WARNING: cib-bootstrap-options: unknown attribute 'maintenance-mode'

Problem: This is a bug in crmsh. See: https://github.com/ClusterLabs/crmsh/pull/386 . It shouldn't affect functionality.

Solution: Wait for a fix.

Symptom: Cluster loses quorum after one node leaves the cluster

Problem: The cluster starts, but it breaks quorum.

The corosync-quorumtool command lists the following incorrect status:

# corosync-quorumtool

(...)

Votequorum information

----------------------

Expected votes: 2

Highest expected: 2

Total votes: 2

Quorum: 2 --> Quorum

Flags: Quorate

A correctly configured cluster will show the following output:

# corosync-quorumtool

(...)

Votequorum information

----------------------

Expected votes: 2

Highest expected: 2

Total votes: 2

Quorum: 1 --> Quorum

Flags: 2Node Quorate WaitForAll

Solution: Fix typo in corosync configuration.

One line is probably incorrect. It may look like

two_nodes: 1

Remove the plural s and change it to

two_node: 1
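The typo is easy to overlook when reading the file. A minimal check, assuming the default configuration path /etc/corosync/corosync.conf (the function name is hypothetical): it reports two_nodes as a typo and otherwise confirms that two_node: 1 is set.

```shell
# Flag the "two_nodes" typo in corosync.conf and confirm "two_node: 1".
# Usage: check_two_node [corosync.conf]
check_two_node() {
  local conf="${1:-/etc/corosync/corosync.conf}"
  if grep -Eq '^[[:space:]]*two_nodes[[:space:]]*:' "$conf"; then
    echo "typo: 'two_nodes' found, corosync expects 'two_node'"
    return 1
  fi
  if grep -Eq '^[[:space:]]*two_node[[:space:]]*:[[:space:]]*1' "$conf"; then
    echo "ok: two_node: 1"
    return 0
  fi
  echo "two_node: 1 not set"
  return 1
}
```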


Checklist for the Installation of SAP Central Systems with SLES HAE

This checklist is intended to help with the installation of SLES HAE for ASCS protection.

The various identifiers will be needed at different stages of the installation. Complete this checklist before the SAP and the SLES HAE installations begin.


| Item | Status/Value |
|---|---|
| SLES subscription and update status | |
| AWS User Privileges for the installing person | |
| VPC | |
| Subnet id A for systems in first AZ | |
| Subnet id B for systems in second AZ | |
| Routing table id for subnet A and B | |
| Optional: | |
| AWS Policies Creation | |
| First cluster node (ASCS and ERS) | |
| Second cluster node (ASCS and ERS) | |
| PAS system | |
| AAS system | |
| DB system (potentially node 1 of a database failover cluster) | |
| Overlay IP address: service ASCS | |
| Overlay IP address: service ERS | |
| Optional: Overlay IP address DB server | |
| Optional: Route 53 configuration | |
| Creation of EFS file system | |
| All instances have Internet access | |


Open Source Agents being used by SLES-for-SAP

SUSE is a dedicated Open Source provider. SUSE tends to use agents published upstream in the ClusterLabs Open Source project.

The Open Source agents published via SLES-for-SAP are the only ones with SUSE support. Customers have ever-growing requirements, and SUSE and AWS work on improving the agents.

This page lists the ClusterLabs agents as well as experimental agents without support.

| Name | Location in SLES file system | GitHub sources | As of GitHub commit | Comment | Shortcomings |
|---|---|---|---|---|---|
| STONITH agent | /usr/lib64/stonith/plugins/external/ec2 | ec2 | 34a217f on ~ Aug 6, 2018 | Stops and monitors EC2 instances. This version filters the EC2 commands, which has the following advantages | Cosmetic: The --output text option is missing in the AWS CLI commands. Adding it would lower the risk of configuration errors with the AWS profile. SUSE Bug 1106700 - AWS: ec2 agent has fixes implemented upstream |
| Move Overlay IP | /usr/lib/ocf/resource.d/suse/aws-vpc-move-ip | aws-vpc-move-ip | 7ac4653 Sept. 4, 2018 | Reassign an AWS Overlay IP address in a routing table | Heads up: This agent is not compatible with the proprietary agent from SUSE. SUSE uses a parameter named address; the upstream version uses the parameter name ip. I haven't yet been able to make this agent work in a SUSE cluster :-( |
| Route 53 | /usr/lib/ocf/resource.d/heartbeat/aws-vpc-route53 | aws-vpc-route53.in | 7632a85 ~August 6, 2018 | Update a record in an AWS Route 53 hosted zone (DNS server) | Calls of ec2metadata will fail if the AWS user data contains strings like "local-ipv4". This can happen in specific AWS Quickstart implementations. Bug 1106706 - AWS: Route 53 agent has fixes implemented upstream |

There is an ongoing discussion about updating the agents. Here are some experimental agents without any SUSE support.

| Name | Location in SLES file system | GitHub sources | As of GitHub commit | Comment | Shortcomings |
|---|---|---|---|---|---|
| Move Overlay IP | /usr/lib/ocf/resource.d/suse/aws-vpc-move-ip | ...soon here... | . | Reassign an AWS Overlay IP address in a routing table | Initially, the new monitoring didn't work when a cluster node rejoined a cluster; the workaround was to use the old monitoring mode by adding the parameter monapi="true" to the primitive. Update: the monitoring function got updated, the new mode works, and no parameter is needed. |
| Route 53 | /usr/lib/ocf/resource.d/heartbeat/aws-vpc-route53 | aws-vpc-route53 | 319ba06 on 2 Jul, 2018 | Update a record in an AWS Route 53 hosted zone (DNS server) | Calls of ec2metadata will fail if the AWS user data contains strings like "local-ipv4". This can happen in specific AWS Quickstart implementations. The implementation of ec2metadata has been replaced with a more specific implementation. |


SLES HAE Cluster Tests with Netweaver on AWS

This is an example of the tests to be performed with a SLES HAE cluster protecting NetWeaver and HANA.

You will want to execute these tests before going into production.

| No. | Topic | Expected behavior |
|---|---|---|
| 1.0 | Set a node on standby/offline: set a node on standby by means of the Pacemaker cluster tools ("crm node standby"). | The cluster stops all managed resources on the standby node (master resources are migrated, slave resources just stop). |
| 1.1 | Set <nodenameA> to standby. | Time until all managed resources were stopped / migrated to the other node: XX sec |
| 1.2 | Set <nodenameB> to standby. | Time until all managed resources were stopped / migrated to the other node: XX sec |
| 2.0 | Switch off cluster node A: power off the EC2 instance (hard / instant stop of the VM). | The cluster notices that a member node is down. The remaining node makes a STONITH attempt to verify that the lost member is really offline. If STONITH is confirmed, the remaining node takes over all resources. |
| 2.1 | Failover time of ASCS / HANA primary | XXX sec |
| 3 | Switch off cluster node B: power off the EC2 instance (hard / instant stop of the VM). | The cluster notices that a member node is down. The remaining node makes a STONITH attempt to verify that the lost member is really offline. If STONITH is confirmed, the remaining node takes over all resources. |
| 3.1 | Failover time of ASCS / HANA primary | XXX sec |
| 4 | Unplug the network connection (split brain): the cluster communication over the network is down. | Both nodes detect the split-brain scenario and try to fence each other (using the AWS STONITH agent). One node shuts down, the other takes over all resources. Failover time: XXX sec |
| 5 | Failure (crash) of the ASCS instance: ps -ef \| grep ASCS \| grep -v grep \| awk '{print $2}' \| xargs kill -9 | The cluster notices the problem and promotes the ERS instance to ASCS while keeping all locks from the ENQ replication table. ASCS failover time: XXX sec |
| 6 | Failure of the ERS instance: ps -ef \| grep ERS \| grep -v grep \| awk '{print $2}' \| xargs kill -9 | The cluster notices the problem and restarts the ERS instance. Time until ERS got restarted on the same node: XX sec |
| 7 | Failure of HANA primary | Time until HANA DB is available again: XXX sec |
| 8 | Failure of corosync: kill the corosync cluster daemon ("kill -9") on one node. | The node without corosync is fenced by the remaining node (since it appears down). The remaining node makes a STONITH attempt to verify that the lost member is really offline. If STONITH is confirmed, the remaining node takes over all resources. Failover of all managed resources: xxx sec |
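The "XX sec" values in the table can be measured with a polling loop instead of a stopwatch. The helper below is a hypothetical sketch: it re-runs a check command once per second until the command succeeds and prints the elapsed seconds, so the resolution is about one second.

```shell
# Hypothetical helper for the "XX sec" measurements: re-run a check command
# once per second until it succeeds, then print the elapsed seconds.
# Usage: time_until <timeout-seconds> <command> [args...]
time_until() {
  local timeout="$1" start now
  shift
  start=$(date +%s)
  until "$@" >/dev/null 2>&1; do
    now=$(date +%s)
    if [ $(( now - start )) -ge "$timeout" ]; then
      echo "timeout after ${timeout}s" >&2
      return 1
    fi
    sleep 1
  done
  now=$(date +%s)
  echo $(( now - start ))
}
```

For example, right after stopping node A, time_until 900 sh -c 'crm_mon -1 | grep -qF "Masters: [ hana02 ]"' on the surviving node hana02 measures the HANA takeover. The grep pattern is an assumption based on the crm_mon output shown in the HANA test pages.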

Keep log files of all relevant resources to prove functionality. For instance, after an ASCS failover keep a copy of /usr/sap/<SID>/ASCS<nr>/work/dev_enqserver. This log file should show that an ENQ replication table was found in memory and that all locks got copied into the new ENQ table. Customers may request to acquire ENQ locks before the failover test and then check the status of those locks after a successful failover (please document with screenshots of SM12 on both nodes before and after failover).

Keep corosync / cluster log of all actions taken during failover tests.

Ask the customer for additional failover tests, requirements, or scenarios they would like to cover.

Have the customer sign the protocol (!) acknowledging that all tested failover scenarios worked as expected.

Remind the customer to re-test all failover scenarios whenever the SAP, OS, or cluster configuration changes or patches are applied.


Testing SLES clusters with SAP HANA Database

The following three tests should be done before a HANA DB cluster is taken into production.

The tests will use all configured components.


Primary HANA server becomes unavailable

Simulated Failures

- Instance failure: the primary HANA instance has crashed or is no longer reachable through the network

- Availability zone failure.

Components getting tested

- EC2 STONITH agent

- HANA agent

- Overlay IP agent

- Optional: Route 53 agent if it is configured

Approach

- Have a correctly working HANA DB cluster

- Shut down eth0 on the instance to isolate it

- The cluster will shut down the node

- The cluster will fail over the HANA database

- The cluster will not restart the failed node

Initial Configuration

Check whether the overlay IP address gets hosted on the interface eth0 on the first node:

hana01:/var/log # ip address list eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000

link/ether 02:ca:c9:ca:a6:52 brd ff:ff:ff:ff:ff:ff

inet 10.0.1.115/24 brd 10.0.1.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.10.21/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::ca:c9ff:feca:a652/64 scope link

valid_lft forever preferred_lft forever
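Reading the ip output by eye works, but the same check is easy to script for repeated test runs. The sketch below reads ip address output on stdin and succeeds when the overlay IP appears as a /32 address; the function name is hypothetical.

```shell
# Succeed if the given overlay IP is configured as a /32 address in the
# "ip address" output read from stdin (so it can also be checked offline).
# Usage: ip -o -4 address show dev eth0 | has_overlay_ip 192.168.10.21
has_overlay_ip() {
  # Fixed-string match; "/32" after the address prevents prefix matches
  # such as 192.168.10.2 matching 192.168.10.21.
  grep -qF "inet $1/32"
}
```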

Check the cluster status as super user with the command crm status:

hana01:/var/log # crm status

Stack: corosync

Current DC: hana02 (version 1.1.15-21.1-e174ec8) - partition with quorum

Last updated: Tue Sep 11 12:37:53 2018

Last change: Tue Sep 11 12:37:53 2018 by root via crm_attribute on hana01

2 nodes configured

6 resources configured

Online: [ hana01 hana02 ]

Full list of resources:

res_AWS_STONITH (stonith:external/ec2): Started hana01

res_AWS_IP (ocf::heartbeat:aws-vpc-move-ip): Started hana01

Clone Set: cln_SAPHanaTopology_HDB_HDB00 [rsc_SAPHanaTopology_HDB_HDB00]

Started: [ hana01 hana02 ]

Master/Slave Set: msl_SAPHana_HDB_HDB00 [rsc_SAPHana_HDB_HDB00]

Masters: [ hana01 ]

Slaves: [ hana02 ]

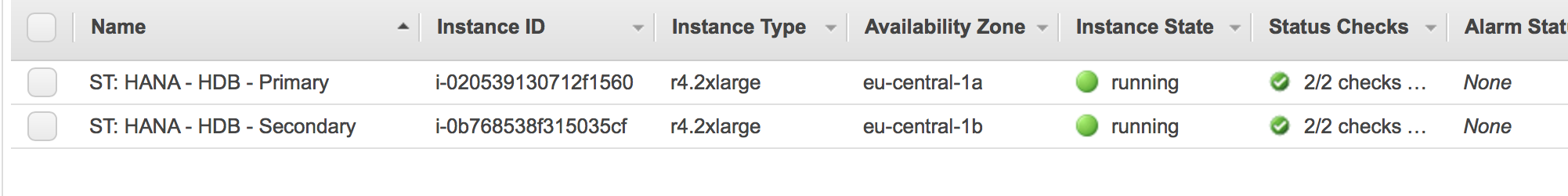

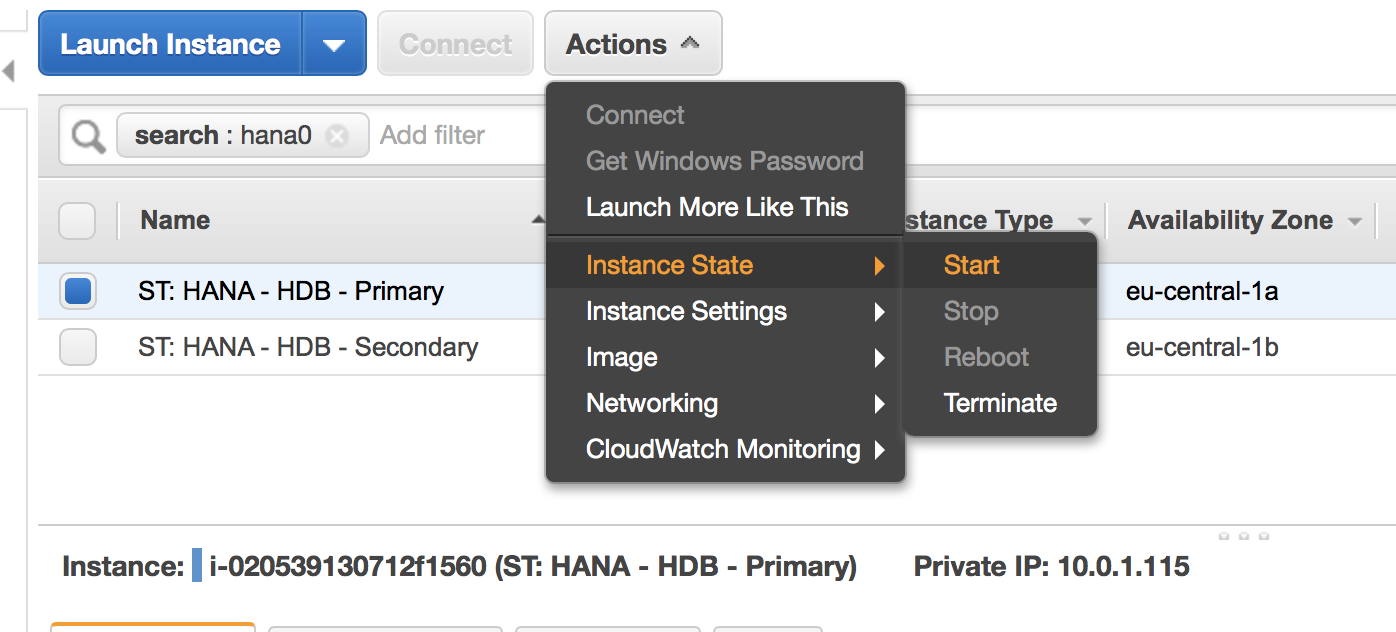

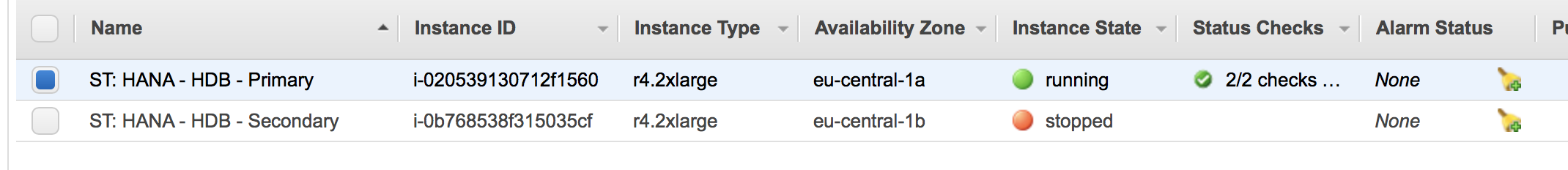

The AWS console shows that both nodes are running:

Damage the Instance

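There are two ways to "damage" an instance:

Force an Immediate Reboot

Become super user on the master HANA node.

Issue the command:

echo 'b' > /proc/sysrq-trigger

The SysRq command b reboots the system immediately, without syncing or unmounting file systems, which simulates a sudden crash of the instance.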

Isolate Instance

Become super user on the master HANA node.

Issue the command:

# ifdown eth0

The current session will now hang. The system will not be able to communicate with the network anymore.

SUSE recommends doing the isolation with firewall rules (iptables).
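A firewall-based isolation could look like the sketch below. It is an assumption, not SUSE's documented procedure: it only drops the traffic between the two cluster nodes, so both nodes can still reach the EC2 API. The outcome is therefore closer to a split-brain test, where either node may win the fencing race, than to a failure of the primary. The helper only prints the iptables commands so that you can review them first; its name is hypothetical.

```shell
# Hypothetical helper: print iptables rules that drop all traffic to and from
# the peer cluster node, or the matching delete rules with "undo".
# Review the output, then apply it as root: isolation_rules <peer-ip> | sh
isolation_rules() {
  local peer="$1" op="-I"
  if [ -z "$peer" ]; then
    echo "usage: isolation_rules <peer-ip> [undo]" >&2
    return 2
  fi
  if [ "$2" = "undo" ]; then
    op="-D"
  fi
  echo "iptables $op INPUT -s $peer -j DROP"
  echo "iptables $op OUTPUT -d $peer -j DROP"
}
```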

Monitor Fail Over

Expect the following in a correctly working cluster:

- The second node will fence the first node. This means it will force a shutdown through AWS CLI commands

- The first node will be stopped

- The second node will take over the overlay IP address and host the HANA database.

The cluster will now switch the master node and the slave node.

Monitor progress from the healthy node!

The first node is reported as offline:

hana02:/home/ec2-user # SAPHanaSR-showAttr

Global cib-time

--------------------------------

global Wed Sep 19 13:18:21 2018

Hosts clone_state lpa_hdb_lpt node_state op_mode remoteHost roles score site srmode sync_state version vhost

-----------------------------------------------------------------------------------------------------------------------------------------------------------

hana01 1537362888 offline logreplay hana02 WDF sync hana01

hana02 PROMOTED 1537363101 online logreplay hana01 4:S:master1:master:worker:master 100 ROT sync SOK 2.00.030.00.1522209842 hana02

hana02:/home/ec2-user # crm_mon -1rfn

Stack: corosync

Current DC: hana02 (version 1.1.15-21.1-e174ec8) - partition with quorum

Last updated: Wed Sep 19 13:18:52 2018

Last change: Wed Sep 19 13:18:21 2018 by root via crm_attribute on hana02

2 nodes configured

6 resources configured

Node hana01: OFFLINE

Node hana02: online

rsc_SAPHana_HDB_HDB00 (ocf::suse:SAPHana): Slave

rsc_SAPHanaTopology_HDB_HDB00 (ocf::suse:SAPHanaTopology): Started

res_AWS_IP (ocf::heartbeat:aws-vpc-move-ip): Started

Inactive resources:

res_AWS_STONITH (stonith:external/ec2): Stopped

Clone Set: cln_SAPHanaTopology_HDB_HDB00 [rsc_SAPHanaTopology_HDB_HDB00]

Started: [ hana02 ]

Stopped: [ hana01 ]

Master/Slave Set: msl_SAPHana_HDB_HDB00 [rsc_SAPHana_HDB_HDB00]

Slaves: [ hana02 ]

Stopped: [ hana01 ]

Migration Summary:

* Node hana02:

res_AWS_STONITH: migration-threshold=5000 fail-count=1 last-failure='Wed Sep 19 13:18:00 2018'

Failed Actions:

* res_AWS_STONITH_monitor_120000 on hana02 'unknown error' (1): call=-1, status=Timed Out, exitreason='none',

last-rc-change='Wed Sep 19 13:18:00 2018', queued=0ms, exec=0ms

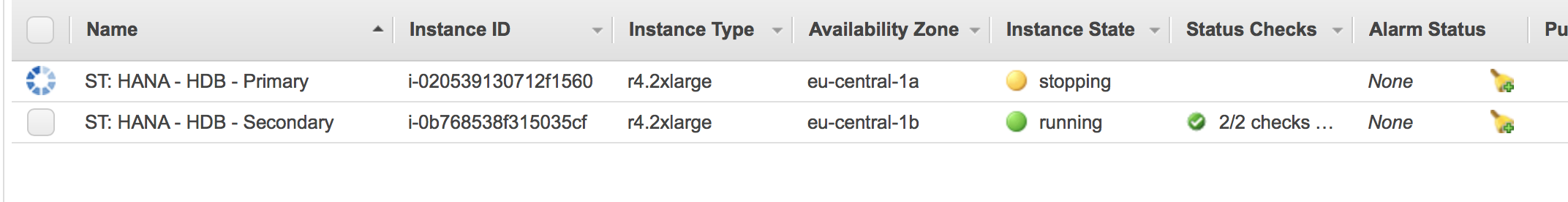

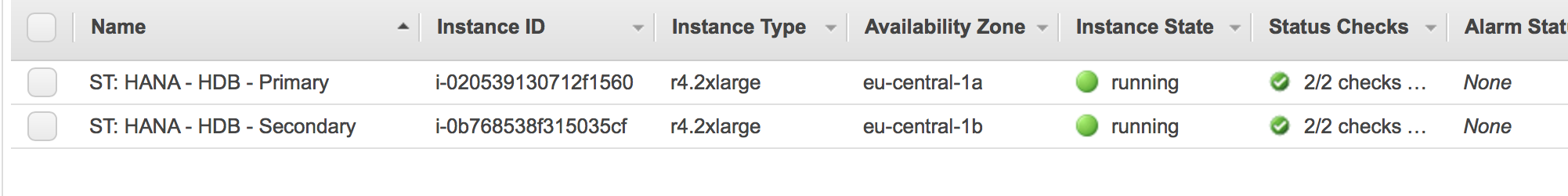

The AWS console will now show that the second node has fenced the first node, which gets shut down:

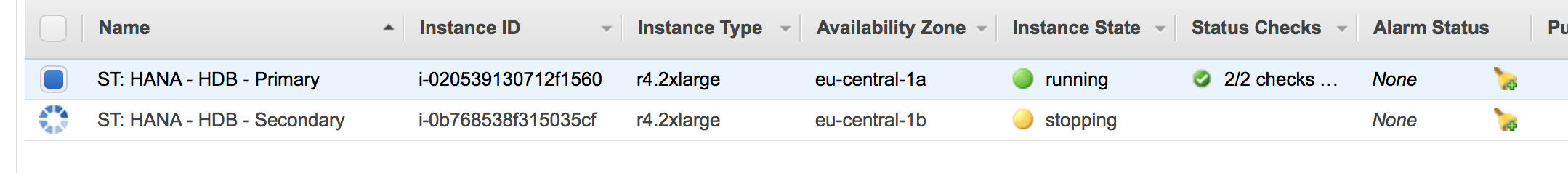

The second node will wait until the first node is shut down. The AWS console will look like:

The cluster will now promote the instance on the second node to be the primary instance:

hana02:/home/ec2-user # SAPHanaSR-showAttr

Global cib-time

--------------------------------

global Wed Sep 19 13:19:14 2018

Hosts clone_state lpa_hdb_lpt node_state op_mode remoteHost roles score site srmode sync_state version vhost

-----------------------------------------------------------------------------------------------------------------------------------------------------------

hana01 1537362888 offline logreplay hana02 WDF sync hana01

hana02 PROMOTED 1537363154 online logreplay hana01 4:P:master1:master:worker:master 100 ROT sync PRIM 2.00.030.00.1522209842 hana02

The cluster status will be the following:

hana02:/home/ec2-user # crm_mon -1rfn

Stack: corosync

Current DC: hana02 (version 1.1.15-21.1-e174ec8) - partition with quorum

Last updated: Wed Sep 19 13:19:16 2018

Last change: Wed Sep 19 13:19:14 2018 by root via crm_attribute on hana02

2 nodes configured

6 resources configured

Node hana01: OFFLINE

Node hana02: online

rsc_SAPHana_HDB_HDB00 (ocf::suse:SAPHana): Master

res_AWS_STONITH (stonith:external/ec2): Started

rsc_SAPHanaTopology_HDB_HDB00 (ocf::suse:SAPHanaTopology): Started

res_AWS_IP (ocf::heartbeat:aws-vpc-move-ip): Started

Inactive resources:

Clone Set: cln_SAPHanaTopology_HDB_HDB00 [rsc_SAPHanaTopology_HDB_HDB00]

Started: [ hana02 ]

Stopped: [ hana01 ]

Master/Slave Set: msl_SAPHana_HDB_HDB00 [rsc_SAPHana_HDB_HDB00]

Masters: [ hana02 ]

Stopped: [ hana01 ]

Migration Summary:

* Node hana02:

res_AWS_STONITH: migration-threshold=5000 fail-count=1 last-failure='Wed Sep 19 13:18:00 2018'

Failed Actions:

* res_AWS_STONITH_monitor_120000 on hana02 'unknown error' (1): call=-1, status=Timed Out, exitreason='none',

last-rc-change='Wed Sep 19 13:18:00 2018', queued=0ms, exec=0ms

Check whether the overlay IP address gets hosted on the eth0 interface of the second node. Example:

hana02:/tmp # ip address list eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000

link/ether 06:4f:41:53:ff:76 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.129/24 brd 10.0.2.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.10.21/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::44f:41ff:fe53:ff76/64 scope link

valid_lft forever preferred_lft forever

Last step: Clean up the message on the second node:

hana02:/home/ec2-user # crm resource cleanup res_AWS_STONITH hana02

Cleaning up res_AWS_STONITH on hana02, removing fail-count-res_AWS_STONITH

Waiting for 1 replies from the CRMd. OK

hana02:/home/ec2-user # crm status

Stack: corosync

Current DC: hana02 (version 1.1.15-21.1-e174ec8) - partition with quorum

Last updated: Wed Sep 19 13:20:44 2018

Last change: Wed Sep 19 13:20:34 2018 by hacluster via crmd on hana02

2 nodes configured

6 resources configured

Online: [ hana02 ]

OFFLINE: [ hana01 ]

Full list of resources:

res_AWS_STONITH (stonith:external/ec2): Started hana02

res_AWS_IP (ocf::heartbeat:aws-vpc-move-ip): Started hana02

Clone Set: cln_SAPHanaTopology_HDB_HDB00 [rsc_SAPHanaTopology_HDB_HDB00]

Started: [ hana02 ]

Stopped: [ hana01 ]

Master/Slave Set: msl_SAPHana_HDB_HDB00 [rsc_SAPHana_HDB_HDB00]

Masters: [ hana02 ]

Stopped: [ hana01 ]

Recovering the Cluster

Restart the stopped node.

Check whether the cluster services get started.

Check whether the first node becomes a replicating server:

hana02:/home/ec2-user # SAPHanaSR-showAttr;

Global cib-time

--------------------------------

global Wed Sep 19 13:57:41 2018

Hosts clone_state lpa_hdb_lpt node_state op_mode remoteHost roles score site srmode sync_state version vhost

-----------------------------------------------------------------------------------------------------------------------------------------------------------

hana01 DEMOTED 30 online logreplay hana02 4:S:master1:master:worker:master 100 WDF sync SOK 2.00.030.00.1522209842 hana01

hana02 PROMOTED 1537365461 online logreplay hana01 4:P:master1:master:worker:master 150 ROT sync PRIM 2.00.030.00.1522209842 hana02
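To check the replication state from a script instead of reading the table, the hypothetical helper below prints the hosts whose SAPHanaSR-showAttr row contains a given value, such as PRIM or SOK. It searches every column because empty columns (for example on an offline node) shift the field positions.

```shell
# Print the host (first column) of every SAPHanaSR-showAttr row that contains
# the given value in one of its columns, e.g. PRIM (primary) or SOK (in sync).
# Usage: SAPHanaSR-showAttr | hana_hosts_with SOK
hana_hosts_with() {
  awk -v want="$1" '{
    for (i = 2; i <= NF; i++)
      if ($i == want) { print $1; next }
  }'
}
```

On the recovered cluster above, piping SAPHanaSR-showAttr into hana_hosts_with SOK should print hana01, and hana_hosts_with PRIM should print hana02.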


Secondary HANA server becomes unavailable

Simulated Failures

- Instance failure: the secondary HANA instance has crashed or is no longer reachable through the network

- Availability zone failure.

Components getting tested

- EC2 STONITH agent

- HANA agent

- Overlay IP agent

- Optional: Route 53 agent if it is configured

Approach

- Have a correctly working HANA DB cluster

- Shut down eth0 on the secondary server to isolate it

- The cluster will shut down the secondary node

- The cluster will keep the primary node running without replication

- The cluster will not restart the failed node

Initial Configuration

Check whether the overlay IP address gets hosted on the interface eth0 on the first node:

hana01:/var/log # ip address list eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000

link/ether 02:ca:c9:ca:a6:52 brd ff:ff:ff:ff:ff:ff

inet 10.0.1.115/24 brd 10.0.1.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.10.21/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::ca:c9ff:feca:a652/64 scope link

valid_lft forever preferred_lft forever

Check the cluster status as super user with the command crm status:

hana01:/var/log # crm status

Stack: corosync

Current DC: hana02 (version 1.1.15-21.1-e174ec8) - partition with quorum

Last updated: Tue Sep 11 12:37:53 2018

Last change: Tue Sep 11 12:37:53 2018 by root via crm_attribute on hana01

2 nodes configured

6 resources configured

Online: [ hana01 hana02 ]

Full list of resources:

res_AWS_STONITH (stonith:external/ec2): Started hana01

res_AWS_IP (ocf::heartbeat:aws-vpc-move-ip): Started hana01

Clone Set: cln_SAPHanaTopology_HDB_HDB00 [rsc_SAPHanaTopology_HDB_HDB00]

Started: [ hana01 hana02 ]

Master/Slave Set: msl_SAPHana_HDB_HDB00 [rsc_SAPHana_HDB_HDB00]

Masters: [ hana01 ]

Slaves: [ hana02 ]

Status of HANA replication:

hana01:/home/ec2-user # SAPHanaSR-showAttr

Global cib-time

--------------------------------

global Wed Sep 19 14:23:11 2018

Hosts clone_state lpa_hdb_lpt node_state op_mode remoteHost roles score site srmode sync_state version vhost

-----------------------------------------------------------------------------------------------------------------------------------------------------------

hana01 PROMOTED 1537366980 online logreplay hana02 4:P:master1:master:worker:master 150 WDF sync PRIM 2.00.030.00.1522209842 hana01

hana02 DEMOTED 30 online logreplay hana01 4:S:master1:master:worker:master 100 ROT sync SOK 2.00.030.00.1522209842 hana02

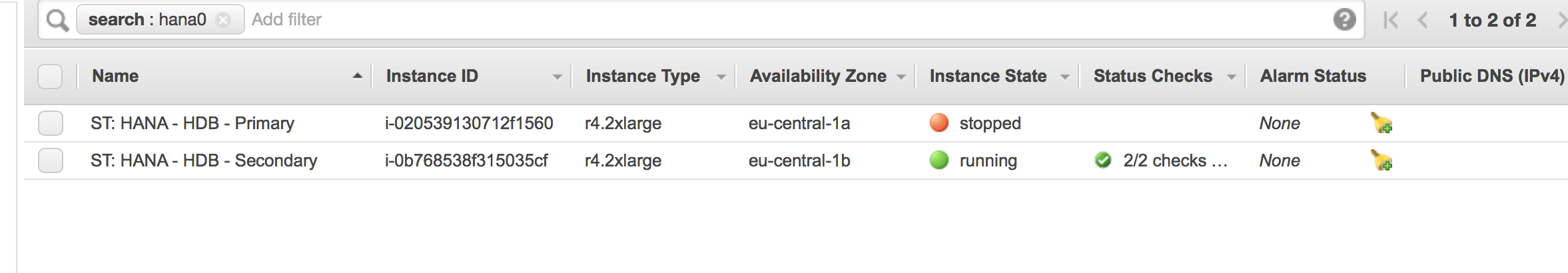

The AWS console shows that both nodes are running:

Damage the Instance

There are two ways to "damage" an instance:

Force an Immediate Reboot

Become super user on the secondary HANA node.

Issue the command:

echo 'b' > /proc/sysrq-trigger

Isolate Secondary Instance

Become super user on the secondary HANA node.

Issue the command:

$ ifdown eth0

The current session will now hang. The system will not be able to communicate with the network anymore.

SUSE recommends performing the isolation with firewall rules (iptables).

Monitor Fail Over

Expect the following in a correctly working cluster:

- The first node fences the second node. This means it forces a shutdown through AWS CLI commands.

- The second node is stopped.

- The first node remains the master node of the HANA database.

- There is no more replication!

Monitor progress from the master node!

The second node is reported as offline:

hana01:/home/ec2-user # SAPHanaSR-showAttr

Global cib-time

--------------------------------

global Wed Sep 19 14:24:13 2018

Hosts clone_state lpa_hdb_lpt node_state op_mode remoteHost roles score site srmode sync_state version vhost

-----------------------------------------------------------------------------------------------------------------------------------------------------------

hana01 PROMOTED 1537367044 online logreplay hana02 4:P:master1:master:worker:master 150 WDF sync PRIM 2.00.030.00.1522209842 hana01

hana02 DEMOTED 30 offline logreplay hana01 4:S:master1:master:worker:master 100 ROT sync SOK 2.00.030.00.1522209842 hana02

The cluster detects that the secondary node is in an unclean state:

hana01:/home/ec2-user # crm_mon -1rfn

Stack: corosync

Current DC: hana01 (version 1.1.15-21.1-e174ec8) - partition with quorum

Last updated: Wed Sep 19 14:24:26 2018

Last change: Wed Sep 19 14:24:13 2018 by root via crm_attribute on hana01

2 nodes configured

6 resources configured

Node hana01: online

rsc_SAPHana_HDB_HDB00 (ocf::suse:SAPHana): Master

res_AWS_STONITH (stonith:external/ec2): Started

rsc_SAPHanaTopology_HDB_HDB00 (ocf::suse:SAPHanaTopology): Started

res_AWS_IP (ocf::heartbeat:aws-vpc-move-ip): Started

Node hana02: UNCLEAN (offline)

res_AWS_STONITH (stonith:external/ec2): Started

rsc_SAPHanaTopology_HDB_HDB00 (ocf::suse:SAPHanaTopology): Started

rsc_SAPHana_HDB_HDB00 (ocf::suse:SAPHana): Slave

Inactive resources:

Migration Summary:

* Node hana01:
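While fencing is in progress, `crm_mon` reports the secondary node as `UNCLEAN (offline)`, and after the shutdown as `OFFLINE`. Below is a small sketch that collects the per-node state so a script can watch for these transitions. It assumes the `Node <name>: <state>` lines of the `crm_mon -1rfn` output shown here. `node_states` is a hypothetical helper, not part of the cluster tooling.

```python
import re


def node_states(crm_mon_output: str) -> dict:
    """Map node name -> state string from `crm_mon -1rfn` output.

    Assumes node header lines such as
      Node hana01: online
      Node hana02: UNCLEAN (offline)
    Lines like "* Node hana01:" in the migration summary are ignored.
    """
    states = {}
    for line in crm_mon_output.splitlines():
        m = re.match(r"\s*Node (\S+): (.+?)\s*$", line)
        if m:
            states[m.group(1)] = m.group(2)
    return states


sample = """\
Node hana01: online
rsc_SAPHana_HDB_HDB00 (ocf::suse:SAPHana): Master
Node hana02: UNCLEAN (offline)
Migration Summary:
* Node hana01:
"""
print(node_states(sample))
```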

The AWS console will now show that the master node has fenced the secondary node, which gets shut down:

The master node waits until the secondary node has been shut down. The AWS console will then look like this:

The cluster now reconfigures its HANA configuration. It knows that the node is offline and that replication has stopped:

hana01:/home/ec2-user # SAPHanaSR-showAttr

Global cib-time

--------------------------------

global Wed Sep 19 14:24:13 2018

Hosts clone_state lpa_hdb_lpt node_state op_mode remoteHost roles score site srmode sync_state version vhost

-----------------------------------------------------------------------------------------------------------------------------------------------------------

hana01 PROMOTED 1537367044 online logreplay hana02 4:P:master1:master:worker:master 150 WDF sync PRIM 2.00.030.00.1522209842 hana01

hana02 30 offline logreplay hana01 ROT sync hana02

The cluster status is the following:

hana01:/home/ec2-user # crm_mon -1rfn

Stack: corosync

Current DC: hana01 (version 1.1.15-21.1-e174ec8) - partition with quorum

Last updated: Wed Sep 19 14:27:05 2018

Last change: Wed Sep 19 14:24:13 2018 by root via crm_attribute on hana01

2 nodes configured

6 resources configured

Node hana01: online

rsc_SAPHana_HDB_HDB00 (ocf::suse:SAPHana): Master

res_AWS_STONITH (stonith:external/ec2): Started

rsc_SAPHanaTopology_HDB_HDB00 (ocf::suse:SAPHanaTopology): Started

res_AWS_IP (ocf::heartbeat:aws-vpc-move-ip): Started

Node hana02: OFFLINE

Inactive resources:

Clone Set: cln_SAPHanaTopology_HDB_HDB00 [rsc_SAPHanaTopology_HDB_HDB00]

Started: [ hana01 ]

Stopped: [ hana02 ]

Master/Slave Set: msl_SAPHana_HDB_HDB00 [rsc_SAPHana_HDB_HDB00]

Masters: [ hana01 ]

Stopped: [ hana02 ]

Migration Summary:

* Node hana01:

res_AWS_STONITH: migration-threshold=5000 fail-count=1 last-failure='Wed Sep 19 14:26:17 2018'

Failed Actions:

* res_AWS_STONITH_monitor_120000 on hana01 'unknown error' (1): call=-1, status=Timed Out, exitreason='none',

last-rc-change='Wed Sep 19 14:26:17 2018', queued=0ms, exec=0ms

Check that the overlay IP address is still hosted on the eth0 interface of the master node. Example:

hana01:/home/ec2-user # ip address list eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000

link/ether 02:ca:c9:ca:a6:52 brd ff:ff:ff:ff:ff:ff

inet 10.0.1.115/24 brd 10.0.1.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.10.21/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::ca:c9ff:feca:a652/64 scope link

valid_lft forever preferred_lft forever
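The overlay IP (192.168.10.21 in this example) appears as an additional /32 address on eth0. Below is a minimal sketch that checks whether a given overlay IP is configured on the interface. It uses Python's standard `ipaddress` module and assumes the `ip address list` output format shown above. The helper names are hypothetical.

```python
import ipaddress
import re


def ipv4_interfaces(ip_output: str) -> list:
    """Return the IPv4 addresses (with prefix) listed in `ip address list` output."""
    result = []
    for line in ip_output.splitlines():
        # Match "inet a.b.c.d/nn" lines; "inet6 ..." lines do not match.
        m = re.match(r"\s*inet (\d+\.\d+\.\d+\.\d+/\d+)", line)
        if m:
            result.append(ipaddress.ip_interface(m.group(1)))
    return result


def hosts_overlay_ip(ip_output: str, overlay_ip: str) -> bool:
    """True if overlay_ip is one of the IPv4 addresses on the interface."""
    target = ipaddress.ip_address(overlay_ip)
    return any(iface.ip == target for iface in ipv4_interfaces(ip_output))


sample = """\
inet 10.0.1.115/24 brd 10.0.1.255 scope global eth0
inet 192.168.10.21/32 scope global eth0
inet6 fe80::ca:c9ff:feca:a652/64 scope link
"""
print(hosts_overlay_ip(sample, "192.168.10.21"))
```

On the node that is not the master, the same check is expected to return False.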

Recovering the Cluster

- Restart your stopped node.

- Check whether the cluster services get started.

- Check whether the restarted (second) node becomes the replicating server again.

See:

hana01:/home/ec2-user # SAPHanaSR-showAttr

Global cib-time

--------------------------------

global Wed Sep 19 14:59:15 2018

Hosts clone_state lpa_hdb_lpt node_state op_mode remoteHost roles score site srmode sync_state version vhost

-----------------------------------------------------------------------------------------------------------------------------------------------------------

hana01 PROMOTED 1537369155 online logreplay hana02 4:P:master1:master:worker:master 150 WDF sync PRIM 2.00.030.00.1522209842 hana01

hana02 DEMOTED 30 online logreplay hana01 4:S:master1:master:worker:master 100 ROT sync SOK 2.00.030.00.1522209842 hana02
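Resynchronization after the restart can take a while. Below is a minimal polling sketch that waits until the restarted node (hana02 here) is reported online with sync_state SOK. It assumes it runs as root on a cluster node where `SAPHanaSR-showAttr` is in the PATH. The row check is a simplification: it matches tokens instead of parsing columns. `wait_for_sync` and its timeout and interval defaults are hypothetical choices, not values from SUSE or SAP.

```python
import subprocess
import time


def node_in_sync(showattr_output: str, host: str) -> bool:
    """True if the host's row reports node_state online and sync_state SOK.

    Simplification: checks tokens on the row that starts with the host
    name instead of mapping values to columns.
    """
    for line in showattr_output.splitlines():
        tokens = line.split()
        if tokens and tokens[0] == host:
            return "online" in tokens and "SOK" in tokens
    return False


def run_showattr() -> str:
    """Run SAPHanaSR-showAttr and return its output (requires root)."""
    return subprocess.run(["SAPHanaSR-showAttr"], capture_output=True,
                          text=True, check=True).stdout


def wait_for_sync(host: str, fetch=run_showattr, timeout: float = 1800,
                  interval: float = 30, sleep=time.sleep,
                  clock=time.monotonic) -> bool:
    """Poll until host is in sync; return False once timeout seconds pass."""
    deadline = clock() + timeout
    while True:
        if node_in_sync(fetch(), host):
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

Called as `wait_for_sync("hana02")` on hana01, it returns True once the output matches the healthy state shown above. The `fetch`, `sleep` and `clock` parameters exist only so the loop can be exercised without a cluster.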


Takeover a HANA DB through killing the Database

Simulated Failures

- Database failure: the database is not working as expected

Components getting tested

- HANA agent

- Overlay IP agent

- Optional: Route 53 agent if it is configured

Approach

- Have a correctly working HANA DB cluster

- Kill database

- The cluster will fail over the database without fencing the node

Initial Configuration

Check whether the overlay IP address is hosted on the interface eth0 of the first node:

hana01:/var/log # ip address list eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000

link/ether 02:ca:c9:ca:a6:52 brd ff:ff:ff:ff:ff:ff

inet 10.0.1.115/24 brd 10.0.1.255 scope global eth0

valid_lft forever preferred_lft forever

inet 192.168.10.21/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::ca:c9ff:feca:a652/64 scope link

valid_lft forever preferred_lft forever

Check the cluster status as super user with the command crm status:

hana01:/var/log # crm status

Stack: corosync

Current DC: hana02 (version 1.1.15-21.1-e174ec8) - partition with quorum

Last updated: Tue Sep 11 12:37:53 2018

Last change: Tue Sep 11 12:37:53 2018 by root via crm_attribute on hana01

2 nodes configured

6 resources configured

Online: [ hana01 hana02 ]

Full list of resources:

res_AWS_STONITH (stonith:external/ec2): Started hana01

res_AWS_IP (ocf::heartbeat:aws-vpc-move-ip): Started hana01

Clone Set: cln_SAPHanaTopology_HDB_HDB00 [rsc_SAPHanaTopology_HDB_HDB00]

Started: [ hana01 hana02 ]

Master/Slave Set: msl_SAPHana_HDB_HDB00 [rsc_SAPHana_HDB_HDB00]

Masters: [ hana01 ]

Slaves: [ hana02 ]

Kill Database

hana01 is the node running the primary (master) HANA database.

The failover will only work if the resynchronization of the slave node has completed. Check this with the command SAPHanaSR-showAttr. Example:

hana02:/tmp # SAPHanaSR-showAttr

Global cib-time

--------------------------------

global Tue Sep 11 09:11:16 2018

Hosts clone_state lpa_hdb_lpt node_state op_mode remoteHost roles score site srmode sync_state version vhost

-----------------------------------------------------------------------------------------------------------------------------------------------------------

hana01 PROMOTED 1536657075 online logreplay hana02 4:P:master1:master:worker:master 150 WDF sync PRIM 2.00.030.00.1522209842 hana01

hana02 DEMOTED 30 online logreplay hana01 4:S:master1:master:worker:master 100 ROT sync SOK 2.00.030.00.1522209842 hana02

The synchronization state (column sync_state) of the slave node has to be SOK.

Become the HANA database administration user (hdbadm in this example) and execute the following command:
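This precondition can be checked in a script before the database is killed. Below is a minimal sketch that parses the `SAPHanaSR-showAttr` table shown above. It assumes whitespace-separated columns where every column of a host row is filled, and it skips rows with empty columns, such as those of an offline node. `parse_hosts` and `ready_for_takeover` are hypothetical helper names.

```python
def parse_hosts(showattr_output: str) -> dict:
    """Parse the Hosts table of SAPHanaSR-showAttr into {host: {column: value}}."""
    header = None
    hosts = {}
    for line in showattr_output.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "Hosts":
            header = tokens  # column names: clone_state ... sync_state ... vhost
            continue
        # Only accept rows whose token count matches the header exactly.
        if header and len(tokens) == len(header):
            hosts[tokens[0]] = dict(zip(header[1:], tokens[1:]))
    return hosts


def ready_for_takeover(hosts: dict) -> bool:
    """True if exactly one host is PROMOTED and all other hosts report SOK."""
    primaries = [h for h, row in hosts.items() if row["clone_state"] == "PROMOTED"]
    secondaries = [row for h, row in hosts.items() if h not in primaries]
    return (len(primaries) == 1 and len(secondaries) > 0
            and all(row["sync_state"] == "SOK" for row in secondaries))


sample = """\
Hosts clone_state lpa_hdb_lpt node_state op_mode remoteHost roles score site srmode sync_state version vhost
-----------------------------------------------------------------------------------
hana01 PROMOTED 1536657075 online logreplay hana02 4:P:master1:master:worker:master 150 WDF sync PRIM 2.00.030.00.1522209842 hana01
hana02 DEMOTED 30 online logreplay hana01 4:S:master1:master:worker:master 100 ROT sync SOK 2.00.030.00.1522209842 hana02
"""
hosts = parse_hosts(sample)
print(hosts["hana02"]["sync_state"], ready_for_takeover(hosts))
```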

hdbadm@hana01:/usr/sap/HDB/HDB00> HDB kill

killing HDB processes:

kill -9 462 /usr/sap/HDB/HDB00/hana01/trace/hdb.sapHDB_HDB00 -d -nw -f /usr/sap/HDB/HDB00/hana01/daemon.ini pf=/usr/sap/HDB/SYS/profile/HDB_HDB00_hana01

kill -9 599 hdbnameserver

kill -9 826 hdbcompileserver

kill -9 828 hdbpreprocessor

kill -9 1036 hdbindexserver -port 30003

kill -9 1038 hdbxsengine -port 30007

kill -9 1372 hdbwebdispatcher

kill orphan HDB processes:

kill -9 599 [hdbnameserver] <defunct>

kill -9 1036 [hdbindexserver] <defunct>

Monitoring Fail Over

The cluster will now swap the roles of the master node and the slave node. The failover is complete once the HANA database on the first node has been synchronized as well:

hana02:/tmp # SAPHanaSR-showAttr

Global cib-time

--------------------------------

global Tue Sep 11 09:20:38 2018

Hosts clone_state lpa_hdb_lpt node_state op_mode remoteHost roles score site srmode sync_state version vhost

---------------------------------------------------------------------------------------------------------------------------------------------------------------

hana01 DEMOTED 30 online logreplay hana02 4:S:master1:master:worker:master -INFINITY WDF sync SOK 2.00.030.00.1522209842 hana01

hana02 PROMOTED 1536657638 online logreplay hana01 4:P:master1:master:worker:master 150 ROT sync PRIM 2.00.030.00.1522209842 hana02
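Comparing the two SAPHanaSR-showAttr outputs, before the kill and after the failover, shows the role swap. The sketch below transcribes the relevant values by hand into dictionaries and decides whether the takeover is complete in the sense described above: a different host is PROMOTED, and the former primary is back as DEMOTED with sync_state SOK. The function names are hypothetical.

```python
def promoted_host(states: dict) -> str:
    """Return the single host whose clone_state is PROMOTED."""
    promoted = [host for host, (clone, _) in states.items() if clone == "PROMOTED"]
    if len(promoted) != 1:
        raise ValueError(f"expected one PROMOTED host, found {promoted}")
    return promoted[0]


def takeover_complete(before: dict, after: dict) -> bool:
    """before/after map host -> (clone_state, sync_state)."""
    old_primary = promoted_host(before)
    if promoted_host(after) == old_primary:
        return False  # no role swap yet
    clone, sync = after[old_primary]
    # The former primary must be registered again as a synced secondary.
    return clone == "DEMOTED" and sync == "SOK"


before = {"hana01": ("PROMOTED", "PRIM"), "hana02": ("DEMOTED", "SOK")}
after = {"hana01": ("DEMOTED", "SOK"), "hana02": ("PROMOTED", "PRIM")}
print(takeover_complete(before, after))
```

While the takeover is still running there may be no PROMOTED host at all, and `promoted_host` then raises ValueError instead of guessing.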

Check the cluster status as super user with the command crm status. Example:

hana02:/tmp # crm status

Stack: corosync